Hybrid AR/VR Smart Glasses Powered by SmartShoe: Patentable System Architecture with External Rendering and Modular Control

By Ronen Kolton Yehuda (Messiah King RKY)

August 2025

Abstract

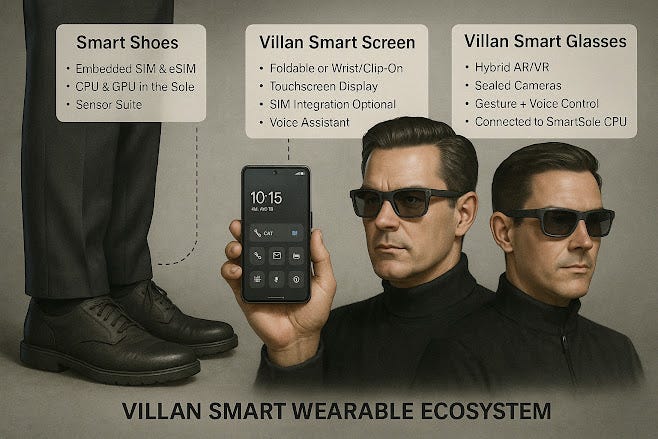

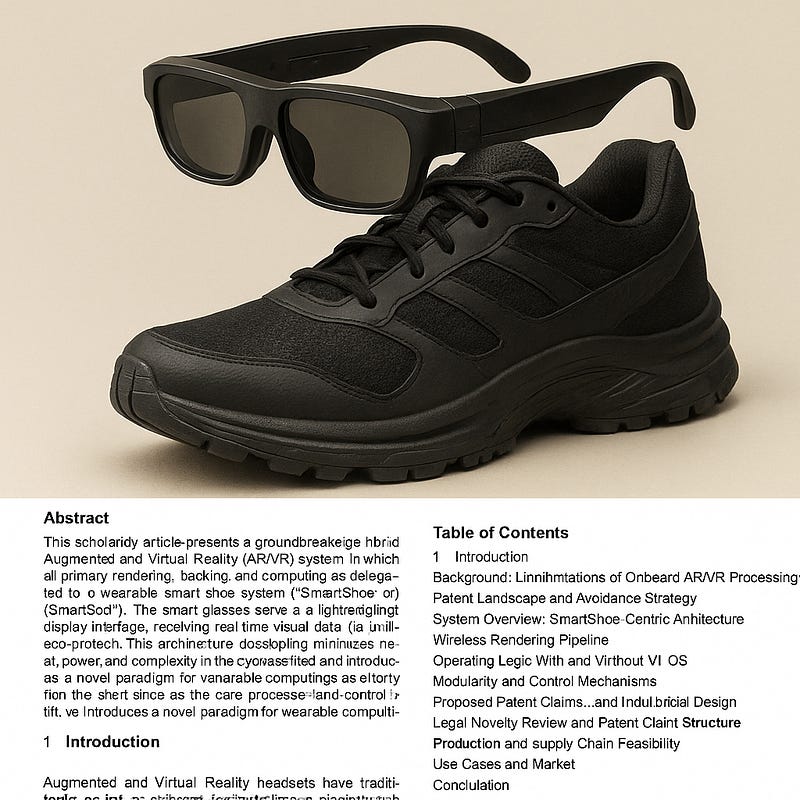

This article presents a hybrid Augmented and Virtual Reality (AR/VR) system in which all primary rendering, tracking, and computing are delegated to a wearable smart shoe system (“SmartShoe” or “SmartSole”). The smart glasses serve as a lightweight display interface, receiving real-time visual data over a wireless link. This architectural decoupling minimizes heat, power draw, and complexity in the eyewear itself and introduces a novel paradigm for wearable computing, positioning the smart shoe as the core processor and control unit. We explore patent-avoidance strategies, candidate claims, and operating logic with or without V1 OS, and assess the patentability and manufacturing feasibility of this innovation.

Table of Contents

- Introduction

- Background: Limitations of Onboard AR/VR Processing

- Patent Landscape and Avoidance Strategy

- System Overview: SmartShoe-Centric Architecture

- Wireless Rendering Pipeline

- Operating Logic With and Without V1 OS

- Modularity and Control Mechanisms

- Proposed Patent Claims

- Hardware Configuration and Industrial Design

- Legal Novelty Review and Patent Claim Structure

- Production and Supply Chain Feasibility

- Use Cases and Market Positioning

- Conclusion

- References

- Appendices

1. Introduction

Standalone Augmented and Virtual Reality headsets rely on onboard processing, which makes them heavy, hot, and expensive. This paper proposes a clean break from this model: a patentable system in which the smart glasses are display-only units, while a SmartShoe-based wearable computer handles all visual computation, positional mapping, and user input. In this design, the SmartShoe becomes the personal computing hub, linked to the glasses over a low-latency wireless connection. The solution supports V1 OS as well as other platforms and is designed for comfort, low cost, scalability, and patent clearance.

2. Background: Limitations of Onboard AR/VR Processing

Heavy onboard processing creates multiple issues in current AR/VR systems:

- Thermal load near the face and eyes

- Battery bulk within headsets

- Weight distribution issues

- Patent congestion around full-stack smart glasses

Offloading processing to external wearables, especially lower-body wearables like SmartShoe, moves heat and battery mass away from the head, addressing these ergonomic and technical bottlenecks while creating new space for patentable innovation.

3. Patent Landscape and Avoidance Strategy

3.1 Existing Patent Saturation Zones

- Waveguide optics and internal displays

- Head-mounted positional tracking

- Onboard SLAM processors

- AR HUD with integrated CPU+GPU

3.2 Novel Patent-Avoidance Principle

Key concept: No rendering or full computing occurs inside the glasses. Instead:

- Glasses are modular, frameless, and sensor-minimal

- SmartShoe performs all tracking, mapping, rendering, and routing

- Glasses are a wireless passive display with optional interaction sensors

This design is intended to avoid direct conflict with Apple, Meta, Microsoft, and Magic Leap patents, subject to the legal review in Section 10.

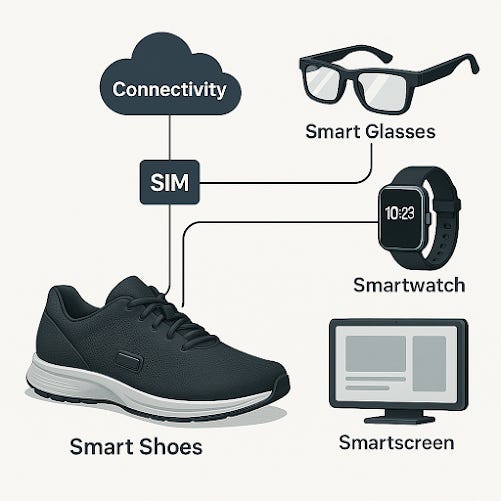

4. System Overview: SmartShoe-Centric Architecture

4.1 Functional Roles

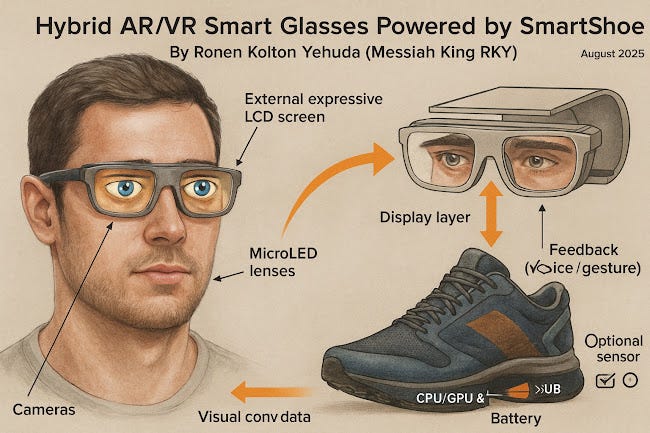

| Component | Function |
|---|---|
| SmartShoe | Rendering engine, position tracking, CPU/GPU, AI, storage |
| Smart Glasses | Transparent microdisplay, audio output, voice mic, optional eye display |
| V1 OS / Device Mesh | Connects SmartShoe to other peripherals (SmartScreen, gloves, etc.) |

4.2 Internal Diagram (Textual)

- SmartShoe (CPU+GPU) → Frame rendered in real-time

- Sent via Wi-Fi 6E → Smart Glasses

- Displayed on microLED lenses

- Optional head position estimated via SmartShoe IMU, magnetics, or UWB triangulation

5. Wireless Rendering Pipeline

5.1 Steps:

- Scene Capture & Generation: SmartShoe computes visual environment

- Frame Rasterization: 60–120 FPS AR/VR frames rendered

- Frame Transmission: Compressed video sent to Smart Glasses over Wi-Fi 6E or UWB

- Decompression & Display: A GPU-free hardware decoder in the glasses decompresses the stream and drives the display

- Input Feedback: Voice, head, or gesture commands sent back to SmartShoe
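The pipeline above implies hard bandwidth and timing limits. The sketch below estimates the raw bitrate of a stereo stream, the compression ratio needed to fit an assumed usable link throughput, and the per-frame time budget at a given frame rate. The resolution, color depth, and 500 Mbit/s usable throughput in the example are illustrative assumptions, not measured SmartShoe or Wi-Fi 6E figures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StreamConfig:
    """Illustrative stream parameters (assumed values, not SmartShoe specs)."""
    width_px: int        # horizontal resolution per eye
    height_px: int       # vertical resolution per eye
    eyes: int            # 2 for stereo, 1 for a monocular display
    bits_per_pixel: int  # 24 for uncompressed 8-bit RGB
    fps: int             # target frame rate (the pipeline cites 60-120 FPS)


def raw_bitrate_mbps(cfg: StreamConfig) -> float:
    """Uncompressed video bitrate in megabits per second."""
    bits_per_frame = cfg.width_px * cfg.height_px * cfg.eyes * cfg.bits_per_pixel
    return bits_per_frame * cfg.fps / 1e6


def required_compression_ratio(cfg: StreamConfig, usable_link_mbps: float) -> float:
    """Factor by which the encoder must shrink the stream to fit the link."""
    if usable_link_mbps <= 0:
        raise ValueError("usable_link_mbps must be positive")
    return raw_bitrate_mbps(cfg) / usable_link_mbps


def frame_budget_ms(fps: int) -> float:
    """Time per frame shared by render, encode, transmit, decode, and display."""
    return 1000.0 / fps


if __name__ == "__main__":
    cfg = StreamConfig(width_px=1920, height_px=1080, eyes=2, bits_per_pixel=24, fps=90)
    print(f"raw: {raw_bitrate_mbps(cfg):.0f} Mbit/s")
    print(f"compression needed at 500 Mbit/s: {required_compression_ratio(cfg, 500):.1f}:1")
    print(f"per-frame budget: {frame_budget_ms(cfg.fps):.1f} ms")
```

With these assumed values the raw stream is roughly 9 Gbit/s, so the encoder would need about 18:1 compression, and all five pipeline stages together would have about 11.1 ms per frame at 90 FPS.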

5.2 Key Technical Advantages:

- No CPU inside glasses

- Minimal heat near the face (no CPU or GPU hotspots)

- Upgradable shoes = upgradable AR/VR system

- One SmartShoe = multi-glass compatibility

6. Operating Logic With and Without V1 OS

6.1 With V1 OS

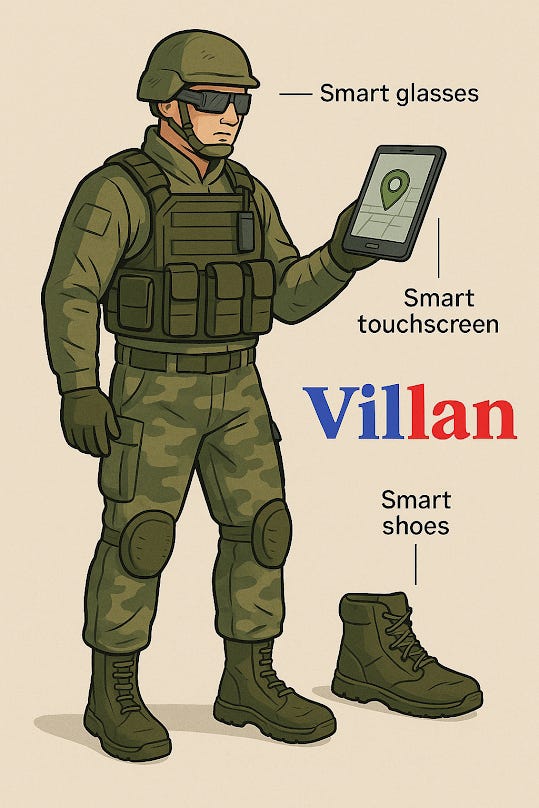

- Fully integrated Villan ecosystem

- SmartShoe acts as hub for SmartGlasses, SmartScreen, SmartHat, SmartWear

- Real-time foot tracking becomes camera-free head tracking proxy

- Energy-efficient mesh: One OS across all wearables
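Using foot tracking as a camera-free head-tracking proxy could start from pedestrian dead reckoning (PDR), a conventional technique: detect steps in the foot IMU signal, assume a step length, and advance the position along the current heading. The minimal 2D sketch below assumes a fixed step length, a per-step heading already supplied by a magnetometer or gyroscope, and a constant eye height to approximate head position. It is not the V1 OS algorithm, and the thresholds and function names are hypothetical.

```python
import math
from typing import Iterable, List, Tuple


def detect_steps(accel_magnitude_g: Iterable[float], threshold_g: float = 1.3,
                 min_gap_samples: int = 20) -> List[int]:
    """Sample indices of detected steps (heel strikes) in a foot IMU signal.

    A step is a rising crossing of threshold_g; crossings closer than
    min_gap_samples to the previous step are ignored.
    """
    steps: List[int] = []
    last = -min_gap_samples
    previous = 0.0
    for i, a in enumerate(accel_magnitude_g):
        if previous < threshold_g <= a and i - last >= min_gap_samples:
            steps.append(i)
            last = i
        previous = a
    return steps


def dead_reckon(step_headings_rad: Iterable[float], step_length_m: float = 0.7,
                start_xy: Tuple[float, float] = (0.0, 0.0),
                eye_height_m: float = 1.6) -> Tuple[float, float, float]:
    """Advance a 2D position by one assumed step length per step.

    Returns an approximate head position (x, y, z); z is a constant assumed
    eye height, not a measurement.
    """
    x, y = start_xy
    for heading in step_headings_rad:
        x += step_length_m * math.cos(heading)
        y += step_length_m * math.sin(heading)
    return x, y, eye_height_m
```

A constant eye height cannot represent crouching or head tilt, and step-based position drifts over time, so this proxy would need correction from other sensors in practice.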

6.2 Without V1 OS

- WebXR- or OpenXR-based rendering

- Smartphone apps interface with SmartShoe as a hardware extension

- Limited gesture support unless third-party SDKs are provided

7. Modularity and Control Mechanisms

7.1 Modular Glasses

- Detachable eye-display (shows eye movement or avatars)

- Interchangeable lens types: Transparent, shaded, mirrored

- No internal battery: powered via micro capacitor or ear-mount battery
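Whether a micro capacitor alone can power the glasses depends on the energy it releases between its charged voltage and the lowest voltage the electronics accept, E = ½C(V_max² − V_min²), divided by the average load. The sketch below computes that runtime. The 10 F capacitance, 2.7 V to 1.0 V window, 0.5 W load, and 90% converter efficiency in the example are assumptions for illustration, not specifications of the proposed glasses.

```python
def capacitor_usable_energy_j(capacitance_f: float, v_max: float, v_min: float) -> float:
    """Energy (J) released when discharging from v_max to v_min: 0.5*C*(Vmax^2 - Vmin^2)."""
    if not 0.0 <= v_min <= v_max:
        raise ValueError("expected 0 <= v_min <= v_max")
    return 0.5 * capacitance_f * (v_max ** 2 - v_min ** 2)


def capacitor_runtime_s(capacitance_f: float, v_max: float, v_min: float,
                        load_w: float, converter_efficiency: float = 0.9) -> float:
    """Approximate runtime (s) at a constant load fed through a DC-DC converter."""
    if load_w <= 0.0:
        raise ValueError("load_w must be positive")
    usable_j = capacitor_usable_energy_j(capacitance_f, v_max, v_min)
    return usable_j * converter_efficiency / load_w


if __name__ == "__main__":
    # Assumed example: 10 F supercapacitor, 2.7 V -> 1.0 V, 0.5 W average load.
    print(f"{capacitor_runtime_s(10.0, 2.7, 1.0, 0.5):.1f} s")
```

With the assumed example, the usable energy is about 31 J and the runtime just under a minute, which shows how strongly the capacitor-only option depends on capacitance and on keeping the glasses' power draw low.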

7.2 SmartShoe Control

- Gesture recognition: Toe taps, side squeezes

- Voice commands via microphone in glasses

- Optional hand gestures using Smart Gloves (if available)

- Balance data used to simulate head tilt (mapping gait to camera)
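Toe taps and side squeezes could be recognized from insole pressure sensors with simple timing rules before any learned model is involved. The sketch below assumes two hypothetical pressure channels normalized to 0 to 1 (a toe-zone sensor and a sidewall sensor) sampled at a fixed rate: a brief toe press counts as a tap and a sustained sidewall press as a squeeze. All thresholds and names are illustrative.

```python
from typing import List, Sequence, Tuple


def pressed_runs(signal: Sequence[float], press_level: float) -> List[Tuple[int, int]]:
    """Return (start_index, length) for each run of samples at or above press_level."""
    runs: List[Tuple[int, int]] = []
    start = None
    for i, value in enumerate(signal):
        if value >= press_level and start is None:
            start = i
        elif value < press_level and start is not None:
            runs.append((start, i - start))
            start = None
    if start is not None:  # still pressed at the end of the window
        runs.append((start, len(signal) - start))
    return runs


def detect_foot_gestures(toe: Sequence[float], side: Sequence[float],
                         sample_rate_hz: float = 100.0, press_level: float = 0.6,
                         max_tap_s: float = 0.25, min_squeeze_s: float = 0.4
                         ) -> List[Tuple[str, float]]:
    """Classify hypothetical normalized (0-1) insole pressure channels into gestures.

    - "toe_tap": toe-zone pressure held for at most max_tap_s
    - "side_squeeze": sidewall pressure held for at least min_squeeze_s
    Returns (gesture, start_time_s) pairs sorted by start time.
    """
    events: List[Tuple[str, float]] = []
    for start, length in pressed_runs(toe, press_level):
        if length / sample_rate_hz <= max_tap_s:
            events.append(("toe_tap", start / sample_rate_hz))
    for start, length in pressed_runs(side, press_level):
        if length / sample_rate_hz >= min_squeeze_s:
            events.append(("side_squeeze", start / sample_rate_hz))
    return sorted(events, key=lambda event: event[1])
```

A deployed version would also need to reject ordinary walking, since every step briefly loads the toe zone; gating taps on whether the wearer is standing still is one plausible option.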

8. Proposed Patent Claims

8.1 Key Patentable Innovations

- “Foot-Based AR/VR Rendering and Display System”
  - A system that renders and transmits AR/VR environments from smart footwear to a passive head-mounted display.

- “Wearable-Decoupled AR System”
  - AR/VR display glasses that operate solely through external footwear-based computational units.

- “Gait-Inferred Positional Tracking for AR Rendering”
  - A method to infer head and body motion from lower-body smart sensors to render 3D visuals.

- “Eye Display for Social Augmentation in Smart Glasses”
  - An outward-facing expressive eye screen for avatar, human mimicry, or status display.

9. Hardware Configuration and Industrial Design

- Smart Glasses: 20–30 g, passive display, modular eye unit, audio arms

- SmartShoe: Embedded processor, battery, IMU, Wi-Fi/BT/UWB, optional camera

- Charging: Wireless pads or via integrated SmartShoe power rail

- Glasses Frames: Fashionable, industrial, military, and sport variants

10. Legal Novelty Review and Patent Claim Structure

Patent attorneys will need to validate:

- Absence of prior claims covering footwear-based rendering systems

- That the glasses contain no CPU+GPU, keeping them outside integrated head-mounted computing claims

- Novelty of gait-derived SLAM (simultaneous localization and mapping)

Suggested filing route: a PCT application through WIPO, claiming priority from initial filings in Israel and the US.

11. Production and Supply Chain Feasibility

- SmartShoe: Rugged, shockproof shell with embedded processing; existing footwear OEMs can integrate module

- Smart Glasses: Manufactured via ODM suppliers with low-cost MicroLED displays

- Assembly: Done locally to retain IP integrity; parts sourced globally

12. Use Cases and Market Positioning

| Domain | Application Example |
|---|---|
| Sports | AR training while jogging via SmartShoe feedback |
| Workspaces | VR meetings powered by wearable foot processor |
| Defense | Tactical AR from shoe computing for soldier HUD |
| Education | Walking lessons with location-based AR overlays |
| Disability | Hands-free navigation and commands from walking cues |

13. Conclusion

Using SmartShoe technology as the rendering engine for AR/VR glasses represents a new architecture. It shifts the paradigm from head-centric computation to footwear-based distributed rendering, allowing lighter, safer, and more ergonomic smart glasses. Subject to the legal validation outlined above, this architecture is designed to avoid common patent traps and opens up several unique, potentially defensible patent claims that position Villan and its ecosystem as pioneers of wearable-centric computing.

The proposed design is production-feasible, legally viable, and strategically aligned with emerging wearable-first computing futures.

Patentable Self-Contained Hybrid AR/VR Smart Glasses: Architecture, Features, and Novel Claims Beyond Existing Patent Constraints

By Ronen Kolton Yehuda (Messiah King RKY)

August 2025

Abstract

This article explores the design of fully self-contained hybrid AR/VR smart glasses that function independently — without relying on external computing devices such as smart shoes or smartphones — while avoiding existing patent conflicts. The goal is to establish a unique, legally patentable hardware-software system capable of operating both AR (Augmented Reality) and VR (Virtual Reality) modes in a modular, ergonomic form factor. We identify new areas for patentable claims, including lens and visor switching mechanisms, dual-mode projection systems, internal logic structures for hybrid environment blending, novel user interface methods, and voice-integrated spatial computing. A full production and legal pathway is presented.

Table of Contents

- Introduction

- Market Context and Patent Obstacles in AR/VR

- Defining “Hybrid” in AR/VR Glasses

- System Architecture for Self-Contained Operation

- Display and Visual Systems

- Control Interfaces and Sensor Innovations

- Patent Avoidance Through Structural and Functional Novelty

- Unique Patentable Claims Proposed

- Thermal, Power, and Ergonomic Strategies

- Manufacturing and Product Strategy

- Competitive Positioning

- Conclusion

- References

- Appendices

1. Introduction

Hybrid AR/VR smart glasses — those capable of switching seamlessly between augmented and virtual modes — represent the next evolutionary step in personal computing. However, current development is dominated by corporations holding vast patent portfolios, making innovation both costly and risky. This paper proposes a new architecture for independent, self-contained hybrid smart glasses that offers a legal pathway toward production and patentability by introducing mechanical, visual, control, and interface innovations not yet claimed in major patent clusters.

2. Market Context and Patent Obstacles in AR/VR

2.1 Patent Bottlenecks

- Internal SLAM processors (Meta, Microsoft)

- Lightfield displays (Magic Leap, Apple)

- Optical see-through with embedded display engines

- Eye-tracking and foveated rendering

- Hand gesture interpretation

2.2 Innovation Opportunity Zones

- Hybrid switching between VR and AR via mechanical or electro-optical shielding

- User interface modalities such as breath, tap, or voice overlays

- Layered projection over both real and virtual environments

- Smart expression displays (emotion-mirroring outward screens)

- User-defined transparency toggling

3. Defining “Hybrid” in AR/VR Glasses

A hybrid AR/VR system allows the user to:

- Overlay content on the real world (AR)

- Transition into a fully occluded immersive experience (VR)

- Switch modes in real-time without removing or replacing hardware

Many current products require mode switching via opaque attachments, smartphone support, or tethering. This article proposes a self-contained, truly hybrid solution.

4. System Architecture for Self-Contained Operation

4.1 Core Components

- Embedded SoC (CPU, GPU, NPU) inside the glasses frame

- Rechargeable battery modules in temples or neckband

- Microdisplay or waveguide lenses

- Rotating or foldable light-blocking visors

- Environmental sensors (light, temperature, air quality)

4.2 Hybrid Operating Logic

- AR Mode: Display overlays on transparent lenses

- VR Mode: Switches to fully immersive mode via:
  - Mechanically dropped or rotated occlusion visor
  - Electrically controlled opacity lenses

- Mixed Mode: Combines real-world view with digitally enhanced edges or segmentation (e.g., edge detection for AI overlays)
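The three modes can be modeled as a small mapping from mode to the state of the occlusion hardware. The sketch assumes an electrochromic layer driven by an opacity value from 0 (clear) to 1 (fully dark), a mechanical visor that is either up or down, and a flag that turns on edge or segmentation overlays. These field names are hypothetical and would depend on the actual lens and visor drivers.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    AR = "ar"
    MIXED = "mixed"
    VR = "vr"


@dataclass(frozen=True)
class OpticsState:
    lens_opacity: float  # 0.0 = fully transparent, 1.0 = fully opaque (assumed scale)
    visor_down: bool     # position of the mechanical occlusion visor
    edge_overlay: bool   # draw AI edge/segmentation highlights over the real view


def optics_for(mode: Mode, has_mechanical_visor: bool) -> OpticsState:
    """Map a hybrid mode to occlusion settings.

    VR lowers the mechanical visor when one is fitted and always drives the
    electrically controlled lenses fully opaque, so it also works without a visor.
    """
    if mode is Mode.AR:
        return OpticsState(lens_opacity=0.0, visor_down=False, edge_overlay=False)
    if mode is Mode.MIXED:
        return OpticsState(lens_opacity=0.0, visor_down=False, edge_overlay=True)
    return OpticsState(lens_opacity=1.0, visor_down=has_mechanical_visor, edge_overlay=False)


def next_mode(current: Mode) -> Mode:
    """Cycle AR -> MIXED -> VR -> AR, e.g. on a single voice or tap command."""
    order = [Mode.AR, Mode.MIXED, Mode.VR]
    return order[(order.index(current) + 1) % len(order)]
```

Keeping the mapping in one pure function lets a voice command, temple tap, or content trigger change modes through the same path.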

5. Display and Visual Systems

5.1 Display Stack Options

- Transparent OLEDs for AR

- MicroLED displays for VR

- Electrowetting/electrochromic shields for VR occlusion

- Dual-layered screen system: AR foreground + VR background
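For the dual-layered stack, the view at each pixel can be approximated as the AR foreground composited over whatever the occlusion shield passes: the VR background where the shield is opaque and the real world where it is clear. The sketch applies the standard “over” operator per color channel under that idealized model. It ignores lens transmission losses and the fact that an emissive transparent layer adds light rather than fully covering what is behind it; names and value ranges are assumptions.

```python
from typing import Tuple

RGB = Tuple[float, float, float]  # linear-light channel values in [0, 1]


def perceived_pixel(fg: RGB, fg_alpha: float, bg: RGB, world: RGB,
                    shield_opacity: float) -> RGB:
    """Idealized view through a two-layer AR/VR lens stack.

    The occlusion shield mixes the VR background (where opaque) with the real
    world (where clear); the AR foreground is then composited on top with the
    standard "over" operator: out = a*fg + (1 - a)*behind.
    """
    for name, value in (("fg_alpha", fg_alpha), ("shield_opacity", shield_opacity)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1]")
    behind = [shield_opacity * bg_c + (1.0 - shield_opacity) * w_c
              for bg_c, w_c in zip(bg, world)]
    r, g, b = (fg_alpha * f + (1.0 - fg_alpha) * x for f, x in zip(fg, behind))
    return (r, g, b)
```

Setting the shield opacity to 0 reproduces the AR case (overlay on the real world), and setting it to 1 reproduces the VR case (overlay on the rendered background).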

5.2 Eye Display (External)

Optional expressive display on the front surface showing virtual eyes or reactions, proposed here as a new patentable visual interaction feature.

6. Control Interfaces and Sensor Innovations

6.1 Multi-Modal Controls

- Voice (embedded microphone)

- Eye-tracking for input (patentable with new algorithm structure)

- Touchpad on temple

- Breath sensors for hidden commands (e.g., blow to pause)

- Chin tap via IMU (tap jawline to switch view)
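A chin or jaw tap should appear in a temple-mounted IMU as a brief, sharp change in acceleration, unlike the smoother profile of ordinary head motion. The sketch flags samples where acceleration jumps by more than a threshold between consecutive readings, suppresses re-triggering for a short refractory period, and reports a double tap when two spikes are suitably spaced. Requiring a double tap is an assumption meant to reduce false triggers from talking or chewing; the thresholds are illustrative, not tuned.

```python
from typing import List, Sequence


def find_spikes(accel_g: Sequence[float], sample_rate_hz: float,
                delta_threshold_g: float = 0.5, refractory_s: float = 0.08) -> List[float]:
    """Timestamps (s) where acceleration jumps sharply between consecutive samples.

    After a spike, further spikes are ignored for refractory_s so that the
    rise and fall of one tap are not counted twice.
    """
    refractory = int(refractory_s * sample_rate_hz)
    spikes: List[float] = []
    last = -refractory - 1
    for i in range(1, len(accel_g)):
        jump = abs(accel_g[i] - accel_g[i - 1])
        if jump >= delta_threshold_g and i - last > refractory:
            spikes.append(i / sample_rate_hz)
            last = i
    return spikes


def is_double_tap(spike_times: Sequence[float], min_gap_s: float = 0.1,
                  max_gap_s: float = 0.4) -> bool:
    """True if two consecutive spikes are spaced like a deliberate double tap."""
    return any(min_gap_s <= later - earlier <= max_gap_s
               for earlier, later in zip(spike_times, spike_times[1:]))
```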

6.2 Sensors

- Environmental mapping camera (non-SLAM dependent)

- Depth via pulsed IR and AI

- Orientation via AI-assisted fusion of gyroscope and accelerometer data
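A conventional, non-AI baseline for gyroscope and accelerometer fusion is the complementary filter: integrate the gyro rate for short-term responsiveness and pull the estimate slowly toward the tilt implied by gravity to cancel gyro drift. The sketch estimates pitch this way and is offered as a reference point that an AI-assisted fusion model would be compared against, not as the proposed method; the axis convention and the 0.98 blend factor are assumptions.

```python
import math
from typing import Iterable, Tuple


def pitch_from_accel(ax: float, ay: float, az: float) -> float:
    """Pitch (rad) implied by the gravity vector, assuming no linear acceleration."""
    return math.atan2(-ax, math.hypot(ay, az))


def complementary_pitch(samples: Iterable[Tuple[float, float, float, float]],
                        dt_s: float, alpha: float = 0.98,
                        initial_pitch: float = 0.0) -> float:
    """Fuse (gyro_pitch_rate_rad_s, ax, ay, az) samples into a pitch estimate.

    alpha close to 1 trusts the gyro over short intervals; (1 - alpha) slowly
    corrects accumulated gyro drift toward the accelerometer tilt.
    """
    pitch = initial_pitch
    for gyro_rate, ax, ay, az in samples:
        gyro_estimate = pitch + gyro_rate * dt_s
        accel_estimate = pitch_from_accel(ax, ay, az)
        pitch = alpha * gyro_estimate + (1.0 - alpha) * accel_estimate
    return pitch
```

With a constant gyro bias of 0.01 rad/s at 100 Hz, pure integration would drift by 0.6 rad per minute, while this filter settles at a fixed offset of about 0.005 rad, given by α·b·Δt / (1 − α).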

7. Patent Avoidance Through Structural and Functional Novelty

Strategies:

- Use of physical mode switching (mechanical shields, lens flipping)

- Emotion-expressive outward screens

- AI-augmented audio UI as primary mode selector (vs. gesture menus)

- Patent-light dual-lens stack rather than waveguides or light engines

- Integration of breath-based or chin-tap controls

Avoiding infringement by:

- Bypassing eye-tracking for gaze UI (use voice + head orientation instead)

- Using non-SLAM object tagging via machine learning
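Using head orientation instead of eye tracking amounts to selecting whichever UI target lies closest to the head's forward direction, with a voice command as confirmation. The sketch picks the target with the smallest angle between the head's forward vector and the direction to each target, inside an acceptance cone. The 10° cone, the target names, and pairing the result with a spoken “select” are illustrative assumptions.

```python
import math
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]


def _angle_between(a: Vec3, b: Vec3) -> float:
    """Angle (rad) between two non-zero 3D vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return math.acos(max(-1.0, min(1.0, dot / norm)))


def head_gaze_target(head_pos: Vec3, head_forward: Vec3,
                     targets: Dict[str, Vec3],
                     cone_deg: float = 10.0) -> Optional[str]:
    """Return the target nearest the head's forward ray within the cone, or None."""
    best_name: Optional[str] = None
    best_angle = math.radians(cone_deg)
    for name, pos in targets.items():
        to_target = tuple(p - h for p, h in zip(pos, head_pos))
        if not any(to_target):  # target at the head position: no direction
            continue
        angle = _angle_between(head_forward, to_target)
        if angle <= best_angle:
            best_name, best_angle = name, angle
    return best_name
```

A voice command would then act on the returned name, so the user never needs a gaze-tracking camera to pick a target.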

8. Unique Patentable Claims Proposed

- “Hybrid Lens Stack for AR and VR Switching in a Single Frame”
  - Lenses composed of two layers (one transparent, one opaque), dynamically combined via user selection or content type.

- “Chin-Tap Control Interface for Wearable Computing”
  - An input method using jaw or chin motion recognized via temple-based IMU as a primary or secondary command interface.

- “Outward-Facing Social Expression Display on AR/VR Glasses”
  - A screen facing others that displays eye movement, emoji, or mood for social feedback in digital environments.

- “Breath-Controlled Command Protocol for Wearable AR Devices”
  - Enables whisper-like control via small changes in airflow to trigger functions (useful in noise-sensitive settings).

- “Modular Mode Switching via Foldable VR Shade Units”
  - Physical shades that rotate inward/outward over AR lenses to create immersive VR with a single device.

9. Thermal, Power, and Ergonomic Strategies

- SoC Isolation in the rear or temple area to keep heat away from eyes

- Passive airflow tunnels built into frames

- Swappable battery modules: One charges while the other is in use

- Lightweight materials: Carbon fiber or magnesium alloy frames

- User-specific sizing via modular arms and nose bridge inserts

10. Manufacturing and Product Strategy

Components:

- SoC: Qualcomm XR platform or Villan V1-compatible chip

- Display: Samsung, BOE, or custom OLED/microLED modules

- Frame: High-tolerance modular plastic + aluminum

Assembly:

- Modular design allows custom production: Sport, Business, Social

- VR occlusion unit is detachable or foldable

- Sold with travel case and cleaning station

11. Competitive Positioning

| Product | Hybrid AR/VR | External Rendering Needed | Patent Risk | Display Expressivity |
|---|---|---|---|---|
| Apple Vision Pro | ❌ | ❌ | High | ❌ |
| Meta Quest 3 | ❌ | ❌ | High | ❌ |
| Proposed Glasses | ✅ | ❌ | Low | ✅ |

12. Conclusion

The proposed self-contained hybrid AR/VR smart glasses system offers a clear path toward novel, patentable innovation by avoiding conventional design pitfalls and introducing unconventional control, switching, and social display mechanisms. By incorporating modular hardware, emotion-display front screens, non-SLAM AI scene detection, and breath/chin-based control methods, the system becomes legally distinct and commercially scalable.

This hybrid AR/VR architecture is more than an incremental update — it is an ergonomic, modular, expressive computing system designed for the next wave of spatial computing.

13. References

- WIPO PatentScope Database

- USPTO Patent Search 2020–2025

- OpenXR Specifications

- Qualcomm Snapdragon XR2 Technical Sheet

- Villan V1 OS Architecture Papers

- Research on Emotion Display Interfaces in Robotics

14. Appendices

- [A] Technical Illustration: Dual Lens Switching

- [B] Chin Tap Sensor Placement Diagram

- [C] Outward Eye Display Concept Sketch

- [D] Patent Avoidance Matrix

- [E] User Mode Switching Flowchart

- [F] Component Weight Distribution Chart

## 10.5 Connectivity and Inter-Device Computing Integration

While the glasses are designed to operate as **fully self-contained** units, they also include **optional wireless and modular connectivity** that expands their capabilities without making them depend on external computation.

### 10.5.1 Wireless Standards

* **Wi-Fi 6E/7**: For high-bandwidth AR cloud rendering or multiplayer VR sessions.

* **Bluetooth LE Audio + Control**: Low-energy connections for controllers, smartwatches, bracelets, and wearable haptics.

* **5G/6G Modules**: Built-in cellular connectivity enables **standalone cloud gaming, navigation, and AI streaming**.

### 10.5.2 Villan Ecosystem Integration

The glasses are **compatible with Villan V1 OS devices** such as SmartShoe™, SmartBracelet, SmartHat, and SmartScreen. This allows:

* **Distributed Computing**: Glasses run lightweight AR, while heavy rendering or AI tasks offload to wearables or SmartScreen hubs.

* **Cross-Input Synchronization**: Voice, gesture, and touch commands across all connected devices unify into a **single control plane**.

* **Multi-Modal Experiences**:

  * Shoes provide haptic feedback during VR gaming.

  * SmartBracelet supplies biometric health overlays in AR.

  * SmartScreen becomes a “second view” or debugging console.
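A single control plane implies that commands from several devices are merged into one time-ordered stream, and that one intent reported by two devices (for example, the same “pause” command picked up by both the glasses and a SmartBracelet) is executed only once. The sketch merges per-device event lists by timestamp and drops repeats of the same command within a short window. The event fields, device names, and 150 ms window are hypothetical, and it assumes device clocks are already synchronized.

```python
import heapq
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass(frozen=True, order=True)
class InputEvent:
    timestamp_ms: int  # assumes device clocks are already synchronized
    command: str       # normalized intent, e.g. "pause" or "next"
    source: str        # originating device, e.g. "glasses" or "smartshoe"


def unify_inputs(streams: Iterable[List[InputEvent]],
                 dedup_window_ms: int = 150) -> List[InputEvent]:
    """Merge per-device event lists (each already time-sorted) into one stream.

    A repeat of the same command within dedup_window_ms of its last accepted
    occurrence is treated as the same user intent and dropped.
    """
    merged: List[InputEvent] = []
    last_accepted: Dict[str, int] = {}
    for event in heapq.merge(*streams):
        previous = last_accepted.get(event.command)
        if previous is not None and event.timestamp_ms - previous < dedup_window_ms:
            continue
        last_accepted[event.command] = event.timestamp_ms
        merged.append(event)
    return merged
```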

### 10.5.3 Patentable Connectivity Features

1. **Adaptive Compute-Sharing Protocol for Hybrid Glasses**

   * Glasses determine whether to process locally or offload to nearby Villan wearables/computers depending on workload, battery, and latency.

2. **Wireless Dual-Pipeline Rendering**

   * One pipeline renders **base AR overlays locally**, while a second wireless stream overlays **cloud-rendered VR assets**, blended in real time.

3. **Context-Aware Peripheral Linking**

   * The glasses automatically detect connected peripherals and reconfigure the UI for them (SmartShoe as navigation haptic, SmartBracelet as heart monitor, keyboard as VR typing surface).

4. **Multi-Device Gesture Fusion**

   * A gesture detected by one device (e.g., SmartHat cameras) is fused with IMU data from the glasses to create **low-patent-risk, multi-device motion tracking**.
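The adaptive compute-sharing decision can be framed as comparing estimated completion time for local versus offloaded execution, with battery level as a tiebreaker. In the sketch below, the glasses offload only when a Villan peer is reachable and the remote path (upload, round trip, and remote compute) meets the task's deadline; they then offload if local execution would miss the deadline, if the battery is low, or if remote is simply faster. The fields, the 20% battery threshold, and the decision order are assumptions, not a defined protocol.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Task:
    local_ms: float     # estimated run time on the glasses' own SoC
    remote_ms: float    # estimated run time on the peer device
    payload_kb: float   # kilobytes to upload for remote execution
    deadline_ms: float  # latest acceptable completion time


@dataclass(frozen=True)
class Link:
    throughput_mbps: float  # measured usable uplink throughput
    rtt_ms: float           # measured round-trip time


def remote_total_ms(task: Task, link: Link) -> float:
    """Upload time + round trip + remote compute, in milliseconds."""
    upload_ms = task.payload_kb * 8.0 / link.throughput_mbps  # kbit / (Mbit/s) = ms
    return upload_ms + link.rtt_ms + task.remote_ms


def should_offload(task: Task, link: Optional[Link], battery_pct: float,
                   low_battery_pct: float = 20.0) -> bool:
    """Decide whether to run a task on a nearby peer instead of locally."""
    if link is None:                      # no peer reachable: stay local
        return False
    remote_ms = remote_total_ms(task, link)
    if remote_ms > task.deadline_ms:      # offloading would miss the deadline
        return False
    if task.local_ms > task.deadline_ms:  # only the remote path meets it
        return True
    if battery_pct <= low_battery_pct:    # spare the glasses' battery when low
        return True
    return remote_ms < task.local_ms      # otherwise take the faster path
```

For example, with a 100 KB payload over an assumed 400 Mbit/s link with a 4 ms round trip, the upload takes 2 ms, so a task needing 30 ms locally but 5 ms remotely finishes in 11 ms when offloaded.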

### 10.5.4 Security & Privacy

* End-to-end encrypted wireless links (AES-256 + post-quantum crypto).

* Local AI determines which data stays on-device vs. offloaded.

* “Privacy Mode”: disables all external device connectivity for sensitive use.

---

## 📌 Why This Matters

* **Avoids Patent Clusters**: Current major players patent **standalone-only** or **tethered-only** designs. A **hybrid of both** (self-contained + distributed optional compute) opens **new patent space**.

* **Ecosystem Lock-In**: Creates **patentable system claims** for multi-device synergy under Villan OS.

* **User Value**: Glasses don’t *need* wearables but **gain power** when combined with them.

---

14. References

- WIPO and USPTO Patent Databases (2020–2025)

- IEEE AR/VR Communication Standards

- OpenXR Technical Specifications

- Villan SmartSole OS Design Whitepaper

- Meta, Microsoft, Apple Glasses Patent Clusters

- RealWear & Magic Leap teardown studies

15. Appendices

- [A] SmartShoe Rendering Flowchart

- [B] Wireless Stack Diagram

- [C] V1 OS Mesh Configuration Map

- [D] Patent Claim Draft Language

- [E] Industrial Design Sketch — Smart Glasses + SmartShoe

- [F] Legal Clearance Map by Jurisdiction

Legal & Collaboration Notice

The SmartSole, Smart Unit for Sole, Smart Shoes, Smart Ankle, Smart Insole, and Hybrid AR/VR Smart Glasses concepts — including their embedded computing architecture, modular design, integrated communication systems, SIM-based connectivity, sensory networks, visual rendering systems, and multi-device interaction frameworks — are original inventions and publications by Ronen Kolton Yehuda (MKR: Messiah King RKY).

These innovations, encompassing the smart computing core, power and charging systems, connectivity protocols, AR/VR optical design, and body-distributed processing architecture, were first authored and publicly released to establish intellectual ownership and authorship rights. All associated technical descriptions, system diagrams, conceptual frameworks, and product texts are part of the inventor’s intellectual property.

Unauthorized reproduction, engineering adaptation, or commercial use without written consent is strictly prohibited.

The SmartSole family (including the Smart Unit for Sole, Smart Ankle, and Smart Insole) and the Hybrid AR/VR Smart Glasses collectively extend computing power, rendering capability, and SIM-enabled communication (internet and calls) to connected devices such as smartphones, SmartScreens, SmartWatches, SmartBracelets, and future wearable or IoT systems.

Together, they form a unified, distributed, and adaptive wearable-computing ecosystem that enhances human-device interaction, mobility, and seamless digital integration.

I welcome ethical collaboration, licensing discussions, technology partnerships, and investment inquiries for the responsible development and global deployment of these innovations.

— Ronen Kolton Yehuda (MKR: Messiah King RKY)
