Human-Robot Hybrid Agents: The Future of Soldiers, Police, Rescue and Firefighters (In context of Smartshoes, smarthelmet/hat, smartglasses)

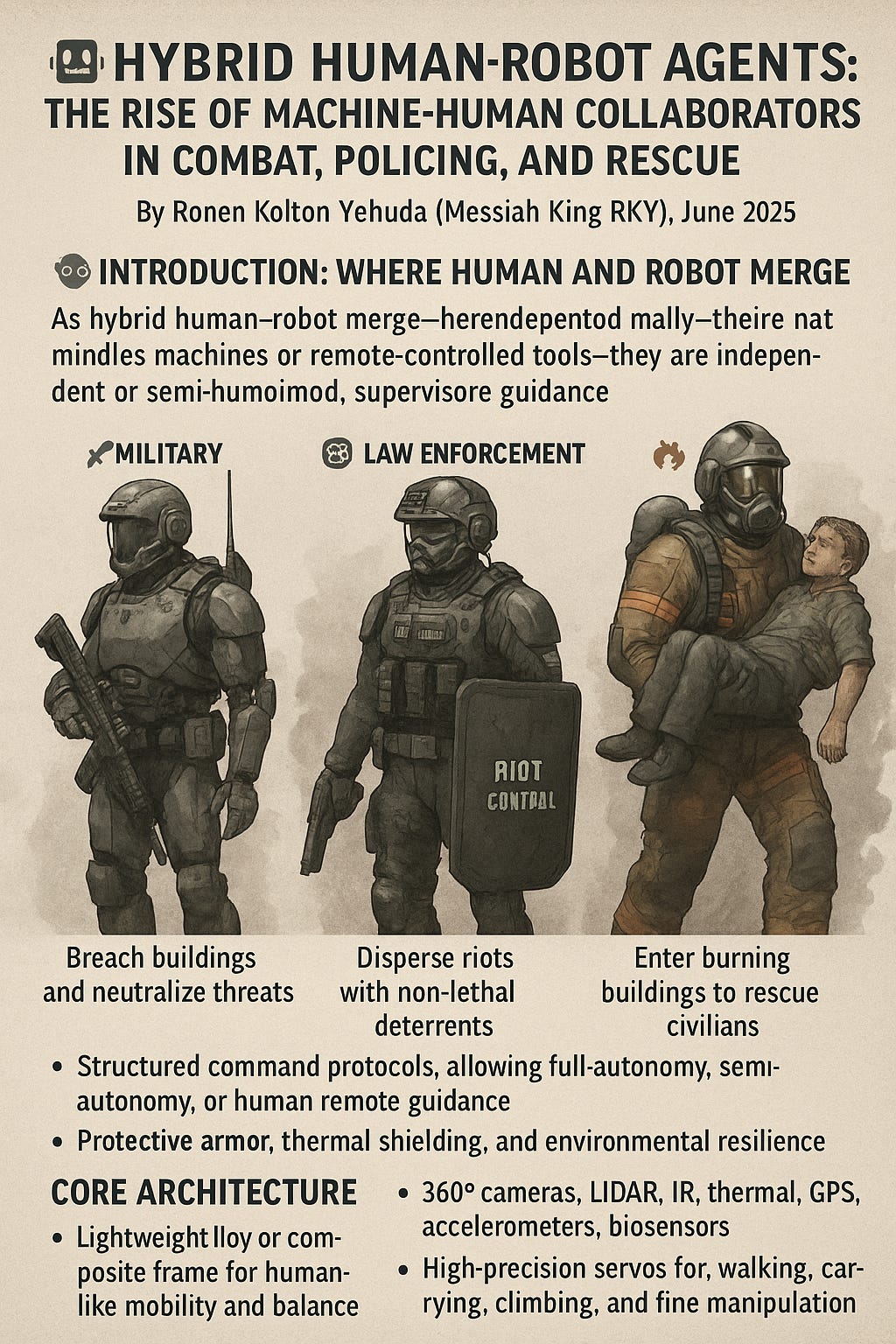

🤖 Hybrid Human-Robot Agents: The Rise of Machine-Human Collaborators in Combat, Policing, and Rescue

By Ronen Kolton Yehuda (Messiah King RKY), June 2025

🧠 Introduction: Where Human and Robot Merge

As global challenges grow more complex—conflict zones, natural disasters, urban collapse—the frontline must evolve. Human limitations in heat, fatigue, and cognitive load call for a new operational partner: the Hybrid Human-Robot Agent.

These are not mindless machines or remote-controlled tools—they are independent robotic systems with embedded logic, modular autonomy, and supervised cooperation. Designed to handle harsh, high-risk environments, hybrid agents are transforming how we fight wars, maintain order, and save lives.

🔧 What Is a Hybrid Human-Robot Agent?

A Hybrid Human-Robot Agent (HHRA) is a humanoid or semi-humanoid machine with:

- AI-embedded reasoning, built on human ethics and real-time feedback
- Full mobility, including running, walking, climbing, and object manipulation
- Protective armor, thermal shielding, and environmental resilience
- Structured command protocols, allowing full autonomy, semi-autonomy, or remote human guidance

They’re deployed to perform tasks deemed too dangerous, repetitive, or exhausting for human teams—while still communicating, coordinating, and adapting alongside them.

🧱 Core Architecture of a Hybrid Agent

| Component | Function |
|---|---|
| Chassis & Skeleton | Lightweight alloy or composite frame for human-like mobility and balance |
| Power System | Internal battery (4–10 hrs) with optional external pack or hydrogen cartridge |
| AI Processor Core | Edge-based decision engine with real-time mission updates |
| Sensor Array | 360° cameras, LIDAR, IR, thermal, GPS, accelerometers, biosensors |
| Actuators | High-precision servos for walking, carrying, climbing, and fine manipulation |
| Safety System | Fireproofing, explosion dampening, fail-safe shutdown, GPS kill switch |

Each agent is modular—different arms, heads, shields, and leg systems can be swapped in minutes based on the mission (urban policing, military breach, rescue, or fire zone).

🛡️ Applications by Sector

⚔️ Military

Hybrid agents enter hostile zones to:

- Breach buildings and neutralize threats
- Transport wounded under fire
- Scan for traps or radiation
- Maintain 24/7 surveillance posts
- Operate in CBRN (chemical, biological, radiological, nuclear) zones

Their logic system can comply with rules of engagement, avoid civilian harm, and accept real-time override by commanding officers.

🚓 Law Enforcement & Riot Control

In dense urban environments, hybrid units:

- Disperse riots using non-lethal deterrents
- Identify and intercept armed threats
- Perform building sweeps with minimal risk to officers
- Support K9 units with sensor analytics
- Guard embassies, borders, and airports

Their presence alone can de-escalate volatile protests, and their ability to resist provocation reduces risk of police overreaction.

🔥 Firefighting and Thermal Disaster Response

Hybrid agents are revolutionizing firefighting with the ability to enter burning buildings, withstand extreme temperatures, and rescue people faster than human crews.

Capabilities include:

- High-temperature resistance up to 800°C
- Infrared vision through smoke, plus thermal detection of hot spots behind walls
- Carrying victims or heavy debris
- Deploying fire retardants or opening pathways
- Acting as mobile communication relays when radio fails indoors

They’re ideal for:

- Residential fires with unknown occupants
- Chemical plant incidents
- Wildfires with poor visibility
- Aircraft and train crash response

Hybrid firefighters don’t replace human crews—they enter first to map, assess, and clear paths for human teams or carry out rescues when collapse is imminent.

🌪️ Disaster Response

In floods, earthquakes, or collapsed cities, hybrid agents:

- Use drone integration to survey ruins
- Deploy lifelines and oxygen kits
- Scan for the heartbeats of buried survivors
- Break concrete or lift steel without fatigue

Their mobility allows them to reach trapped individuals, carry supplies, and provide night-long support in remote or devastated regions.

📡 AI Command & Collaboration

Hybrid agents are never rogue—they’re embedded into human systems through:

- Command tablets or AR glasses
- Voice and gesture communication
- Behavior boundaries and override protocols
- Shared tactical awareness between human teams and agents

Agents can either act autonomously or take direct human direction—depending on the complexity and sensitivity of the situation.

🔐 Ethics and Governance

To ensure safety and trust:

- All hybrid agents are locked to mission scope (no lethal override outside war zones)
- Encrypted logs of all activity are stored and monitored
- Transparency indicators flash red or green depending on current mode (e.g., active, standby, remote-controlled)
- Emergency teams can disable the agent via manual circuit or remote kill-switch

International standards are under development to regulate hybrid agent deployment in civilian areas and humanitarian zones.

🧩 Future of Hybrid Agents

As sensors get smaller, processors more powerful, and battery life longer, hybrid agents will gain:

- Enhanced dexterity (surgery-level hands)
- Emotional response modules for working with children, the elderly, or PTSD survivors
- Multi-agent swarm logic, allowing them to cooperate like rescue dogs or fire crews
- AI-on-chip ethics engines for real-time moral calculations

The end goal is not to remove humans—but to protect them, to extend their capabilities, and to save lives where no human could survive alone.

🛠 Final Word: The Protected Human, the Responsible Machine

The Hybrid Human-Robot Agent is more than a machine. It’s a trained partner, a tireless helper, and a shield in places no human should go alone.

From fire to war, rubble to riot—these agents step in when seconds count and lives hang in the balance. What we give them is not just power, but purpose. What they return is protection.

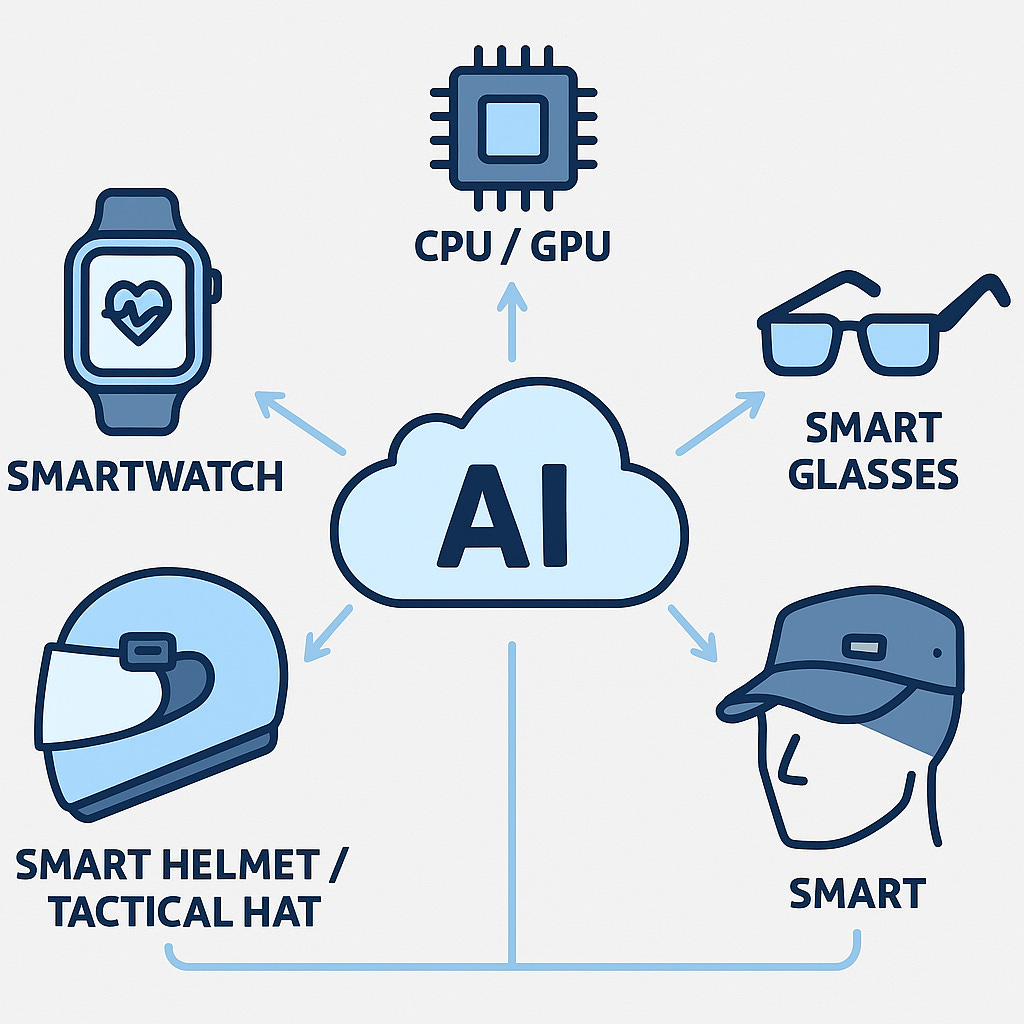

🤖 Human-Robot Hybrid Agents: Enhanced by Smart Wearables and Embedded Intelligence

By Ronen Kolton Yehuda (Messiah King RKY), June 2025

🔧 Introduction: Wearable Robotics for the Hybrid Frontline

Modern threats—whether military, criminal, industrial, or environmental—demand superhuman performance. Human-Robot Hybrid Agents (HRHAs) represent a revolutionary evolution in frontline roles. These are not robots pretending to be humans, nor are they cyborgs. They are trained humans equipped with wearable robotics and AI-integrated devices that turn every limb, step, and signal into a coordinated mission asset.

This article explores the expanded wearable ecosystem—smart helmets, hats, shoes, belts, bracelets, and external tools—that empower the hybrid agent of tomorrow.

🧠 Human-Robot Hybrid Wearables: A Distributed Intelligence Network

Each wearable operates as an autonomous module yet synchronizes seamlessly through encrypted AI mesh networks. Together, they provide:

- Tactical awareness
- Environmental adaptation
- Health monitoring
- Command input/output
- Mission-specific augmentation

🪖 Smart Helmets and Tactical Hats

The helmet serves as the main control center. Features include:

- HUD with thermal, night, and drone vision
- Facial recognition, danger flagging, mission overlays
- Voice, eye-tracking, and neural command input
- Audio processing with real-time translation
- Interchangeable visor (low-light, riot, blackout)

Lightweight tactical hats are used in low-profile scenarios and civilian rescue missions. These include:

- Directional microphones
- Low-visibility cameras
- AR display bands over the visor

“The helmet is no longer just armor—it’s mission control, communication hub, and tactical eye in one.”

👟 Smart Combat Shoes

Often overlooked, smart shoes provide both physical stability and tactical intelligence:

- Internal computing module with AI-guided pathfinding
- Gait detection and terrain feedback
- Self-adjusting tension and noise-suppression
- GPS+IMU for real-time location tracking
- Emergency haptics and balance recovery assist

Shoes are connected to health AI for fatigue monitoring, shock absorption, and injury alerts.
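
The GPS+IMU tracking listed above is commonly built by dead-reckoning from inertial data between satellite fixes and then nudging the estimate toward each fix. The following is only a minimal sketch under stated assumptions: a flat 2D plane, a step length and heading already derived from the IMU, and a hypothetical blend weight `gps_weight`. None of these names come from the shoe design itself.

```python
import math
from dataclasses import dataclass


@dataclass
class Position:
    x: float  # metres east of the start point
    y: float  # metres north of the start point


def dead_reckon(pos: Position, step_length_m: float, heading_deg: float) -> Position:
    """Advance the estimate by one detected step along the IMU heading."""
    heading = math.radians(heading_deg)
    return Position(
        pos.x + step_length_m * math.sin(heading),
        pos.y + step_length_m * math.cos(heading),
    )


def blend_with_gps(pos: Position, gps: Position, gps_weight: float = 0.2) -> Position:
    """Pull the dead-reckoned estimate toward a GPS fix (hypothetical weight)."""
    return Position(
        (1 - gps_weight) * pos.x + gps_weight * gps.x,
        (1 - gps_weight) * pos.y + gps_weight * gps.y,
    )


estimate = Position(0.0, 0.0)
for _ in range(10):  # ten 0.7 m steps heading due north
    estimate = dead_reckon(estimate, 0.7, 0.0)
estimate = blend_with_gps(estimate, Position(0.5, 7.5))
```

A fielded system would more likely use a Kalman-style filter with per-sensor uncertainty; the fixed weight here only illustrates the idea of correcting inertial drift with occasional fixes.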

🛡️ Smart Belts and Waist Units

Smart belts are central to energy, utility, and AI coordination:

- Power distribution to all wearables
- Quick-deploy holsters for drones, tools, or restraint gear
- Inertial and rotation sensors for fall detection and center-of-gravity control
- Embedded microprocessors for distributed AI logic

Some belts include on-body encryption processors for mission logs and cyber protection.
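
Fall detection from the belt's inertial sensors can be approximated with a simple two-stage rule: a brief near-weightless reading followed shortly by an impact spike. The sketch below assumes accelerometer samples in units of g and uses hypothetical thresholds (`FREE_FALL_G`, `IMPACT_G`, `MAX_GAP_SAMPLES`) that would need tuning on real data; it is an illustration, not the belt's actual algorithm.

```python
import math
from typing import Iterable, Tuple

FREE_FALL_G = 0.4     # assumed: total acceleration below this suggests free fall
IMPACT_G = 2.5        # assumed: a spike above this suggests an impact
MAX_GAP_SAMPLES = 25  # assumed: max samples allowed between free fall and impact


def magnitude_g(sample: Tuple[float, float, float]) -> float:
    """Length of the acceleration vector, in g."""
    ax, ay, az = sample
    return math.sqrt(ax * ax + ay * ay + az * az)


def detect_fall(samples: Iterable[Tuple[float, float, float]]) -> bool:
    """Return True if a free-fall reading is followed shortly by an impact spike."""
    free_fall_at = None
    for i, sample in enumerate(samples):
        g = magnitude_g(sample)
        if g < FREE_FALL_G:
            free_fall_at = i  # remember the most recent free-fall sample
        elif free_fall_at is not None and g > IMPACT_G:
            if i - free_fall_at <= MAX_GAP_SAMPLES:
                return True
            free_fall_at = None  # spike came too late to be the same event
    return False


standing = [(0.0, 0.0, 1.0)] * 5
fall = standing + [(0.0, 0.1, 0.2)] * 3 + [(1.5, 0.5, 2.8)] + standing
```

Here `detect_fall(standing)` stays quiet while `detect_fall(fall)` flags the event, which could then feed the emergency haptics or health alerts described elsewhere in the article.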

🔗 Smart Bracelets and Armwear

Wrist-mounted systems function as:

- Silent alert receivers via vibration pulses
- Biometric scanners (BP, HR, oxygen, stress)
- Voice-controlled task launchers
- Quick-scan drones or sensor relay points

Smart bracelets often serve as emergency control overrides in field environments.

🧩 External Smart Devices and Attachments

The modular nature of hybrid agents allows for mission-specific customization:

| Module | Function |
|---|---|
| Shoulder Drone Dock | Stores and auto-deploys quadcopters for recon or surveillance |
| Backpack Battery Units | Extended 8–12 hour power supply |
| Side-Mounted Tool Arms | Foldable robotic arms for medical, rescue, or construction use |
| Sensor Pods | For radiation, gas, or environmental analysis |
| Thermal Packs | Internal temperature regulation (hot/cold environments) |

"Hybrid agents are mobile ecosystems—not just operators."

🌐 Embedded AI Integration

Each wearable integrates into a mesh system controlled by:

- Body AI Core: Decides posture, assist levels, and threat signals
- Mission AI: Interprets sensor data, maps terrain, provides real-time tactics
- Health AI: Monitors operator condition, triggers rest or auto-treatment protocols
- Communication AI: Synchronizes with team units, voice channels, and base HQ

Wearables can function autonomously for short missions or under full command sync.

🧭 Use Cases Across Domains

| Sector | Wearable Use |
|---|---|
| Military | Recon mapping (helmet), terrain movement (shoes), combat tool access (belt) |
| Police | Non-lethal restraint deployment (belt), crowd threat detection (helmet), calm signal feedback (bracelet) |
| Firefighting | Toxic environment analysis (sensor pod), thermal navigation (helmet), AI-guided pathfinding (shoes) |
| Disaster Response | Debris lifting (armwear), evac coordination (helmet HUD), biometric triage (bracelets) |

🔐 Safety, Privacy, and Oversight

- Override Protocols: Each wearable can be disabled manually or remotely
- Privacy Controls: Only pre-authorized scans allowed; public mode indicators visible
- Audit Logs: All commands, health events, and location data encrypted and stored
- Ethical Boundaries: AI cannot take lethal action; smart belts restrict tool deployment based on situation

🛠 Maintenance, Modularity, and Upgrades

| Device | Maintenance Cycle | Upgrade Notes |
|---|---|---|
| Helmet | 500 hrs | Visor replacement, sensor recalibration |
| Shoes | 800 km | Sole and processor unit check |
| Belt | Quarterly | Battery, AI sync update |
| Bracelets | Monthly | Health sensor testing |
| External Devices | Mission-based | Swappable based on task profile |

All devices update via secure over-the-air (OTA) firmware, with monthly ethics and mission-logic patches.
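
The maintenance table mixes usage-based intervals (hours, kilometres) with calendar-based ones, so a scheduler has to handle both. The sketch below is one way that check might look; the `Schedule` type, the `is_due` helper, and the day counts used for "quarterly" (91) and "monthly" (30) are assumptions added for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class Schedule:
    usage_limit: Optional[float] = None  # hours or km since last service
    calendar_days: Optional[int] = None  # days since last service


# Intervals from the table; "quarterly" and "monthly" are approximated in days.
SCHEDULES = {
    "helmet": Schedule(usage_limit=500),  # operating hours
    "shoes": Schedule(usage_limit=800),   # kilometres walked
    "belt": Schedule(calendar_days=91),
    "bracelet": Schedule(calendar_days=30),
}


def is_due(device: str, usage_since_service: float,
           last_service: date, today: date) -> bool:
    """Return True when either the usage or the calendar interval has elapsed."""
    sched = SCHEDULES[device]
    if sched.usage_limit is not None and usage_since_service >= sched.usage_limit:
        return True
    if sched.calendar_days is not None:
        return today - last_service >= timedelta(days=sched.calendar_days)
    return False
```

For example, `is_due("helmet", 512, date(2025, 6, 1), date(2025, 6, 2))` reports that the helmet needs service because it has passed 500 operating hours, regardless of the date.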

🛡️ Conclusion: The Wearable Warfighter and Civilian Guardian

Human-Robot Hybrid Agents are no longer limited to full-body exosuits. They are defined by the intelligence they wear. From smart helmets that process tactical environments to shoes that calculate terrain risks in real time, each wearable is part of a cybernetic extension of human will.

These agents are not machines—they are humans with distributed cognition, modular strength, and ethical augmentation.

Human-Robot Hybrid Agents: The Future of Soldiers, Police, and Firefighters

By Ronen Kolton Yehuda (Messiah King RKY), June 2025

🔧 Introduction: The Rise of Human-Robot Hybrid Forces

In a world where threats are evolving faster than institutions can adapt, traditional military, law enforcement, and emergency services are reaching their physical, mental, and technological limits. The future lies not in replacing humans with machines—but in merging them. Human-robot hybrid agents combine the judgment, emotion, and adaptability of humans with the precision, strength, and resilience of robotics. This synthesis delivers a next-generation frontline force equipped for extreme conditions, ethical decision-making, and autonomous support.

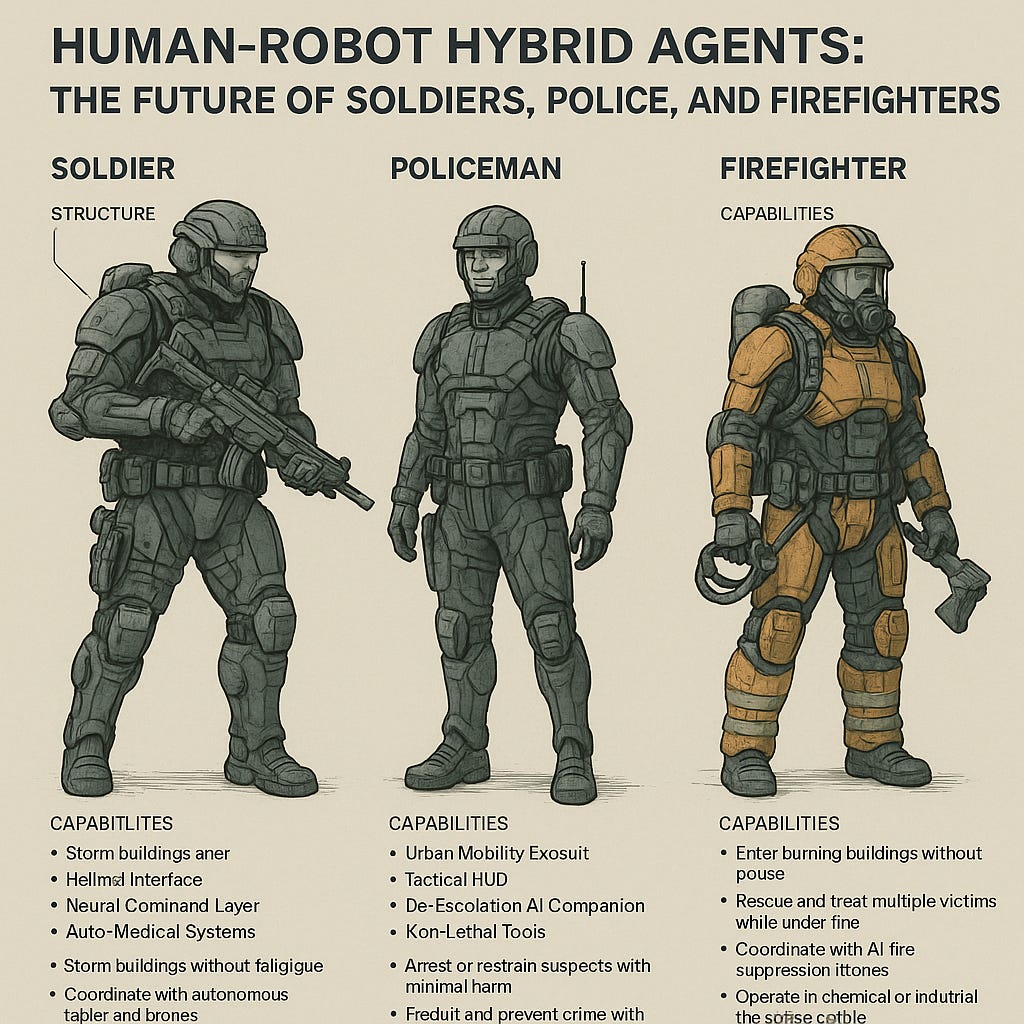

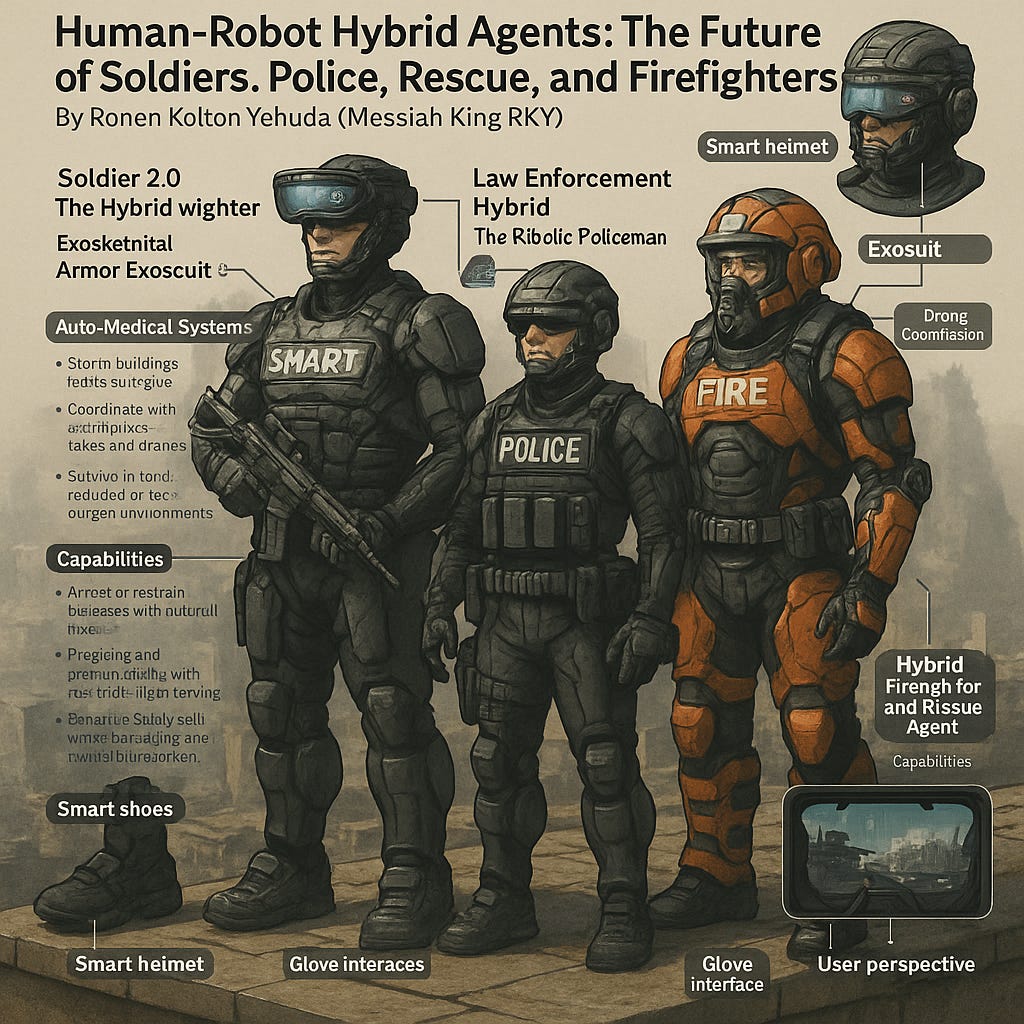

⚔️ Soldier 2.0: The Hybrid Warfighter

Structure

- Exoskeletal Armor: A robotic exosuit enhances strength, speed, endurance, and protection. Built-in joint actuators allow soldiers to carry 5× their weight, jump higher, and move without fatigue.
- Helmet Interface: AI-enhanced smart helmet provides real-time battlefield data, drone feeds, language translation, and target acquisition with thermal and night vision overlays.
- Neural Command Layer: Soldiers can operate drones, fire systems, or call for support using eye-tracking, vocal triggers, or mind-to-machine interfaces.
- Auto-Medical Systems: Embedded med units auto-administer injections, clot wounds, or monitor vitals until evacuation.

Capabilities

- Storm buildings without fatigue
- Coordinate with autonomous tanks and drones
- Survive in toxic, irradiated, or low-oxygen environments
- Navigate using AI-enhanced terrain mapping

🚓 Law Enforcement Hybrid: The Robotic Policeman

Structure

- Urban Mobility Exosuit: Designed for agility over brute force; this lighter frame supports urban patrols, crowd control, high-speed pursuits, and rapid de-escalation.
- Tactical HUD (Heads-Up Display): Identifies threats, matches faces with national databases, and analyzes body language for predictive behavior detection.
- De-Escalation AI Companion: Embedded AI acts as an emotional advisor, helping officers maintain calm, make fair decisions, and defuse tension based on situational modeling.
- Non-Lethal Tools: Integrated stun systems, net launchers, shield deployment, and drone-deployed voice projection for safe crowd engagement.

Capabilities

- Arrest or restrain suspects with minimal harm
- Predict and prevent crime with real-time urban monitoring
- Operate safely in riots, active shooter zones, or chemical events
- Maintain high mobility while processing and transmitting evidence

🔥 Hybrid Firefighter: The Human-Robot Rescue Unit

Structure

- Thermal Armor Exosuit: Shields against 1200°C+ heat, collapsing structures, and hazardous smoke. Oxygen-independent breathing systems allow extended operations.
- Multifunctional Arms: Mechanically assisted arms break down walls, lift debris, and carry multiple victims simultaneously.
- Drone Coordination Suite: Each firefighter commands aerial and ground drones for reconnaissance, water targeting, or structure scanning.
- Environmental AI: Predicts fire paths, calculates collapse risks, and identifies safest entry/exit paths using building blueprints and heat flow sensors.
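
One plausible way to identify the safest entry or exit path is a shortest-path search in which hot cells cost more to cross. The sketch below runs Dijkstra's algorithm over a small grid; the grid representation, the `safest_path` name, and the cost rule (1 per move plus the cell's heat penalty, with `None` marking a blocked cell) are all assumptions for illustration rather than the Environmental AI's actual method.

```python
import heapq
from typing import List, Optional, Tuple

Cell = Tuple[int, int]


def safest_path(heat: List[List[Optional[float]]],
                start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Dijkstra over a grid; entering a cell costs 1 plus its heat penalty.

    None marks a blocked cell (collapsed or impassable).
    """
    rows, cols = len(heat), len(heat[0])
    best = {start: 0.0}
    prev = {}
    queue = [(0.0, start)]
    while queue:
        cost, cell = heapq.heappop(queue)
        if cell == goal:
            path = [cell]
            while cell in prev:  # walk back to the start
                cell = prev[cell]
                path.append(cell)
            return path[::-1]
        if cost > best.get(cell, float("inf")):
            continue  # stale queue entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and heat[nr][nc] is not None:
                new_cost = cost + 1.0 + heat[nr][nc]
                if new_cost < best.get((nr, nc), float("inf")):
                    best[(nr, nc)] = new_cost
                    prev[(nr, nc)] = cell
                    heapq.heappush(queue, (new_cost, (nr, nc)))
    return None  # goal unreachable


heat_map = [
    [0.0, 9.0, 0.0],
    [0.0, None, 0.0],
    [0.0, 0.0, 0.0],
]
route = safest_path(heat_map, (0, 0), (0, 2))
```

In this example the direct route crosses a very hot cell, so the search prefers the longer detour along the bottom row.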

Capabilities

- Enter burning buildings without pause
- Rescue and treat multiple victims while under fire
- Coordinate with AI fire suppression drones
- Operate in chemical or industrial fire zones safely

🤖 Ethics, Oversight, and Command

All hybrid agents must operate under strict command protocols, human oversight, and legal frameworks:

- Override Controls: Officers and commanders retain kill-switch and decision veto rights.
- Behavioral Governance: Built-in AI must follow strict ethics logic trees, prioritizing life, law, and proportionality.
- Audit Logs: Every action is recorded, encrypted, and reviewed post-mission to ensure accountability.
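
For post-mission review to be trustworthy, the audit log should make tampering detectable. One common technique is a hash chain, where each entry's hash covers the previous entry's hash. The sketch below uses Python's standard `hashlib` and `json` modules; the entry fields and the function names `append_entry` and `verify` are hypothetical. Note that this addresses integrity only: the encryption mentioned above would be a separate layer.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder hash preceding the first entry


def _digest(body: Dict) -> str:
    """SHA-256 over a canonical JSON encoding of the entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_entry(log: List[Dict], action: str, timestamp: str) -> Dict:
    """Append an entry whose hash covers its content and the previous hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {"action": action, "timestamp": timestamp, "prev_hash": prev_hash}
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify(log: List[Dict]) -> bool:
    """Recompute every hash; an edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: entry[k] for k in ("action", "timestamp", "prev_hash")}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


log: List[Dict] = []
append_entry(log, "mode_change:standby", "2025-06-01T10:00:00Z")
append_entry(log, "override:remote", "2025-06-01T10:05:00Z")
```

Calling `verify(log)` on the untouched log succeeds; changing any recorded action afterwards causes it to fail.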

🧠 Training and Integration

Training for human-robot hybrid agents includes:

- Physical adaptation to exosuit systems
- Cyber-ethical decision-making
- Real-time AI feedback usage
- Cross-functional cooperation with drones and smart infrastructure

🌍 Civil and Military Dual Use

These hybrid agents are not limited to warzones or emergencies—they are essential for:

- Natural disaster response
- Border patrol and anti-terror surveillance
- Critical infrastructure protection
- Crowd safety in major events

🛡️ Conclusion: Humanity Augmented, Not Replaced

Robots alone can’t understand context. Humans alone can’t keep pace with modern dangers. But together—through respectful, ethical augmentation—society can create a new protective force that is faster, stronger, safer, and smarter.

Technical Framework for Human-Robot Hybrid Agents Equipped with Smart Wearables and Bionic Enhancements

By Ronen Kolton Yehuda (Messiah King RKY), June 2025

Abstract

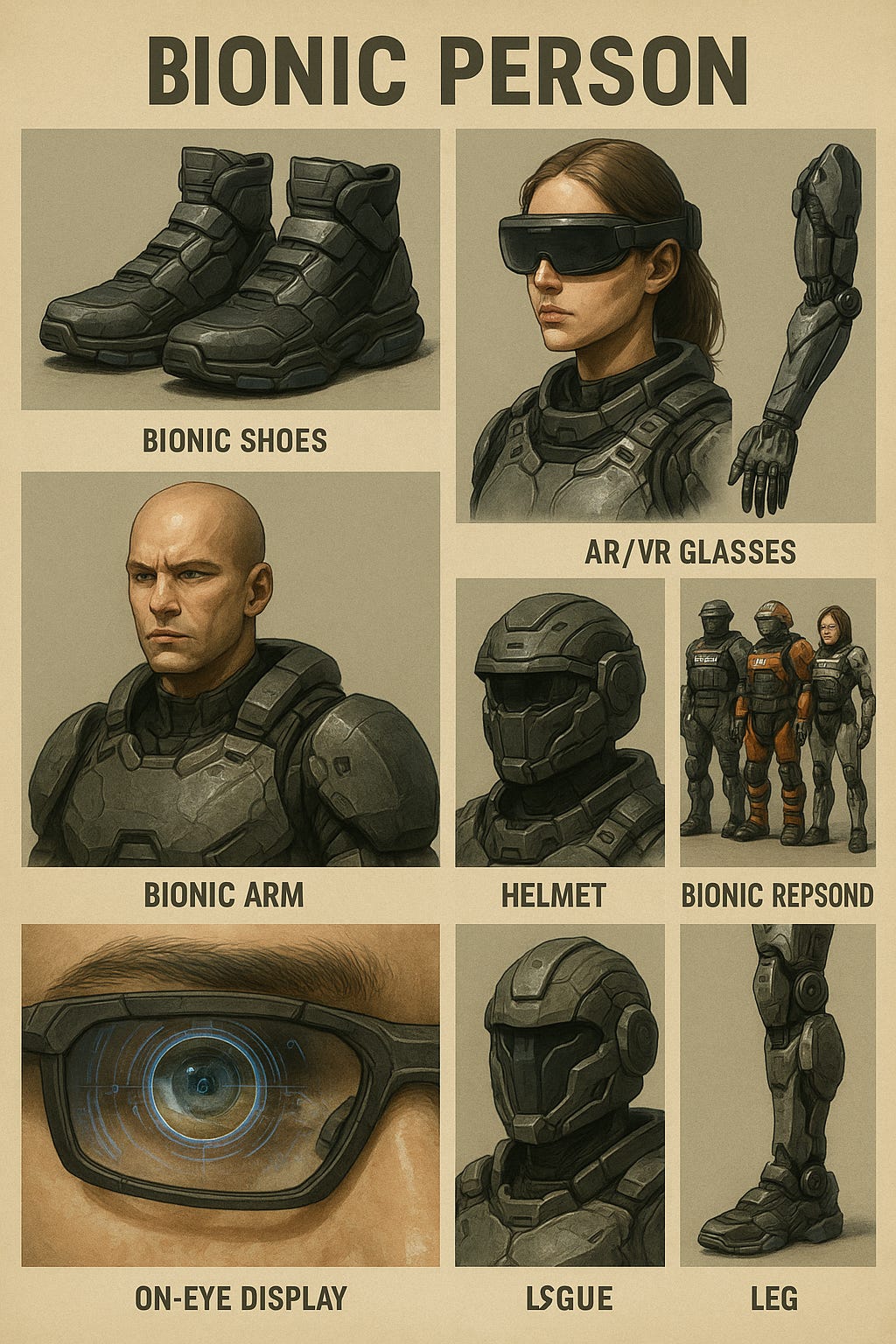

This article presents a comprehensive technical framework for the development and deployment of Human-Robot Hybrid Agents (HRHAs) enhanced with smart wearables, bionic systems, and embedded AI interfaces. These agents are designed for military, law enforcement, firefighting, and emergency rescue roles. By integrating exoskeletal augmentation, smart shoes, AR/VR glasses, advanced helmets, and neural interfaces, HRHAs represent a new paradigm of ethical augmentation where human cognition remains in command while robotics provide physical and informational superiority.

1. Introduction: Human-Machine Synergy

Human-Robot Hybrid Agents bridge the gap between fully autonomous robots and traditional personnel by empowering humans with modular bionic enhancements. Unlike autonomous robots, HRHAs preserve human decision-making authority while enabling superhuman endurance, data processing, and tactical coordination across dangerous or dynamic environments.

2. Architecture Overview

2.1 Core Subsystems

| Layer | Components | Purpose |
|---|---|---|
| Human Operator | Biological decision-maker | Ethical, strategic, and situational control |
| Exoskeletal Frame | Robotic limbs, joint motors, spinal support | Strength, protection, and movement extension |
| Bionic Interface Layer | Smart shoes, haptic gloves, AR/VR glasses | Augmented mobility and environmental feedback |
| AI Core & Sensors | Embedded computing and vision/sound/smell sensors | Real-time analysis, threat prediction, and navigation |
| Communication Suite | Encrypted mesh, SIM, and satellite connectivity | Team sync, cloud support, and command relay |

3. Key Wearable Technologies

3.1 Smart Shoes

- Sensors: Gait recognition, ground material feedback, balance diagnostics
- Computing: Embedded microchip with real-time path prediction and terrain analysis
- Features: Self-adjusting sole pressure, vibration cues for directional navigation, integrated GPS tracking

3.2 AR/VR Smart Glasses

- Display: Transparent AR overlays + immersive VR mode
- Data Feed: Tactical maps, facial recognition, thermal overlays, and body health vitals
- Interfaces: Eye-tracking controls, audio relay, team-based HUD sharing

3.3 AI-Enabled Helmet

- Protection: Ballistic-grade with anti-radiation and smart airflow
- Interface: Voice commands, neural-triggered HUD control, external threat detection
- Modularity: Interchangeable visors (sunlight, night, tactical blackout), noise-canceling sensors, gesture relay

4. Mechanical & Functional Enhancements

4.1 Exosuit Features

| Feature | Details |
|---|---|
| Load Support | Carry up to 300 kg of weight with full agility |
| Joint Actuation | 6–8 degrees of freedom per limb, powered by servo motors |
| Auto-Stabilization | Gyroscopic feedback for stair, rooftop, and rubble navigation |
| Battery Life | 4–8 hours of operation with hot-swappable cells |

4.2 Arm Tools & Bionic Limbs

- Hydraulic-boosted arms for lifting, gripping, breaching
- Tactile feedback in gloves for rescue operations
- Integrated tasers or non-lethal projectiles (law enforcement units)
- Medical-grade precision grip modes for surgery or triage

5. AI and Control Interface

5.1 Intelligence Modes

| Mode | Description |
|---|---|
| Advisory | AI suggests actions; human executes |
| Shared Control | AI performs secondary functions (drones, weapon stabilization) |
| Conditional Autonomy | Short-term mission logic during blackouts or injury |

5.2 Embedded AI Modules

- Tactical AI: Real-time battlefield or urban threat modeling
- Rescue AI: Structural collapse prediction, victim heat signatures
- De-escalation AI: Body language and crowd tension monitoring
- Health AI: Monitors operator fatigue, vitals, emotional state

6. Role-Specific Adaptations

6.1 Military Hybrid

- EMP-resistant armor
- UAV swarm coordination
- Weapon lock-on and recoil balancing
- Enhanced desert/night vision

6.2 Law Enforcement Hybrid

- Smart restraint tools (foam, net, electric tether)
- Civilian voice modulator for public announcements
- Live evidence recording with metadata time stamps

6.3 Firefighter and Rescue Hybrid

- Oxygen-independent breathing with built-in filtration
- Debris removal claws, stretcher deployment arm
- Temperature-regulated inner suit with anti-burn gel
- Augmented pathfinding in dense smoke or water

7. Communications & Cloud Integration

- Data Security: AES-256 and quantum-encryption ready
- Protocol Stack: Wi-Fi 6E, 5G/6G, fallback LoRa mesh
- Cloud Command: Continuous sync with smart city infrastructure, hospitals, or military servers
- Remote Diagnostics: Full self-diagnostics dashboard including health, battery, and situational logs
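
The protocol stack above implies a fallback order: prefer the fastest link and drop to the LoRa mesh when nothing else is reachable. A minimal sketch of that selection follows, assuming the listed order is also the preference order and that each link reports a simple up/down status; the link labels and the `select_link` function are illustrative names only.

```python
from typing import Dict, Optional

# Assumed preference order, taken from the order the links are listed in.
LINK_PRIORITY = ["wifi6e", "cellular", "lora_mesh"]


def select_link(available: Dict[str, bool]) -> Optional[str]:
    """Return the highest-priority link currently reported as up, or None."""
    for link in LINK_PRIORITY:
        if available.get(link, False):
            return link
    return None  # no connectivity: the agent must operate offline
```

For example, `select_link({"wifi6e": False, "cellular": False, "lora_mesh": True})` falls through to the LoRa mesh. A real stack would also weigh signal quality and bandwidth rather than a boolean status.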

8. Maintenance, Upgrades, and Ethics

| Component | Maintenance Cycle | Notes |
|---|---|---|
| Exosuit Joints | 1000 hours | Joint torque calibration and seal replacement |
| Battery Units | 500–800 cycles | Replace or recharge in field |
| Sensors | Auto-diagnose | Manual recalibration every 3 months |
| AI Firmware | OTA every 30 days | Includes ethics and threat modeling updates |

Embedded Ethical Controls

- Pre-coded rules of engagement
- Force authorization request layers
- Transparency indicators: public mode light, camera mirror feed
- Override triggers: remote or biometric

9. Deployment Strategy

- Phase I: Elite urban SWAT teams, special forces, disaster relief pilots
- Phase II: Regional rollouts across national security and public safety institutions
- Phase III: Civilian-adapted suits for fire and medical volunteer corps
- Phase IV: Cross-border and UN-compatible units for peacekeeping and climate emergencies

10. Conclusion

Human-Robot Hybrid Agents equipped with smart shoes, AR/VR glasses, AI-integrated helmets, and wearable exoskeletons represent the future of multidomain frontline operations. These systems enable strength without brutality, data without overload, and speed without fatigue—while preserving the central role of human conscience.

They are not cyborg overlords. They are the augmented guardians of tomorrow.

The future of soldiers, officers, firefighters, and rescue agents is not mechanical. It is hybrid. It is human—enhanced.

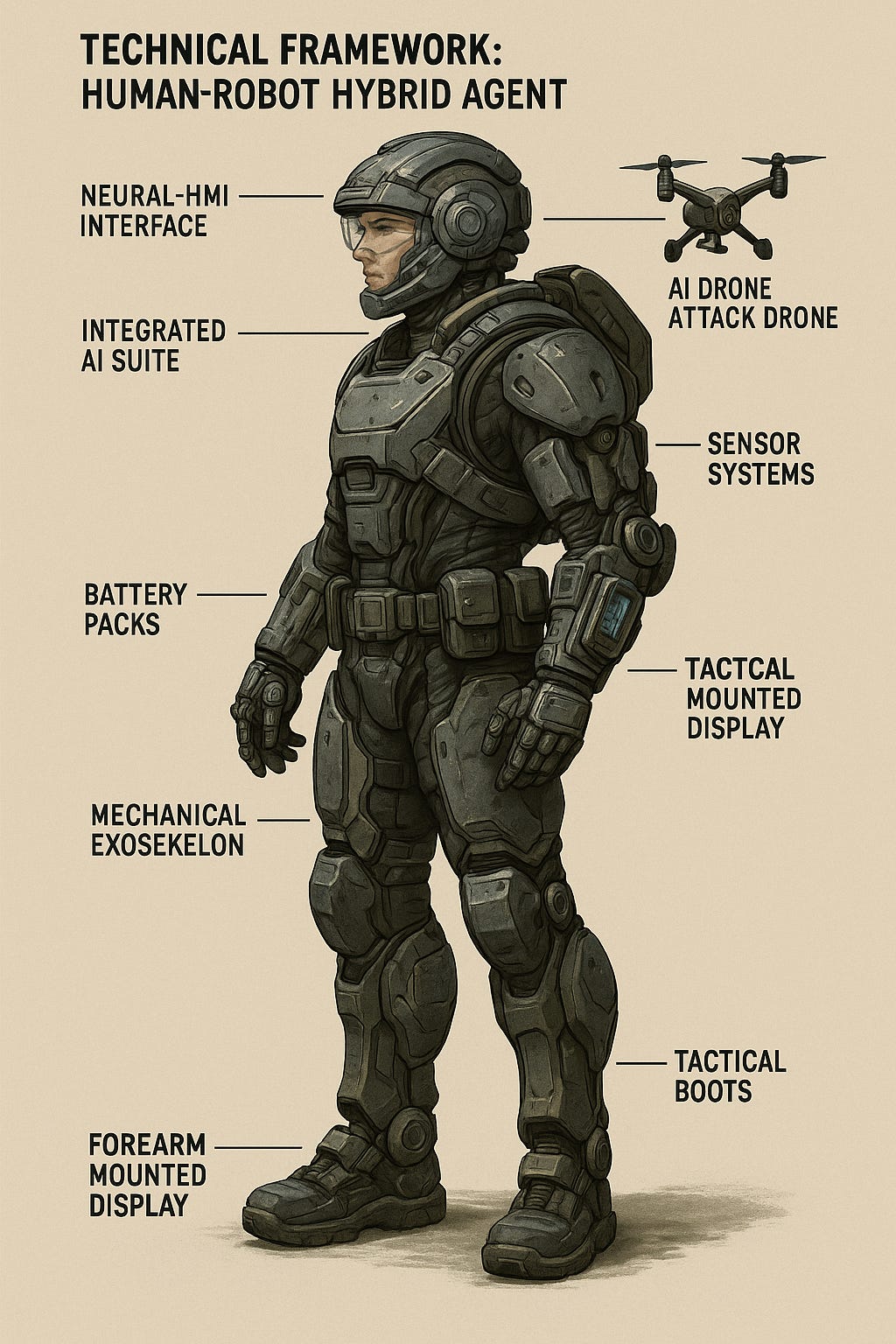

Technical Framework for Human-Robot Hybrid Agents in Defense, Law Enforcement, and Emergency Response

By Ronen Kolton Yehuda (Messiah King RKY), June 2025

Abstract

This article outlines the architecture, systems integration, and operational design of Human-Robot Hybrid Agents (HRHAs) intended for deployment across military, policing, and firefighting domains. By combining wearable robotics, embedded AI, autonomous subsystems, and human cognitive control, HRHAs represent a new paradigm in physical augmentation and distributed operational intelligence. The article defines subsystems, input/output chains, human–machine interfaces, and role-specific adaptations.

1. Introduction

Human-Robot Hybrid Agents are wearable-integrated, AI-enhanced operational entities where the biological operator is central to decision-making, and mechanical systems provide enhanced capacity in strength, sensing, survivability, and coordination. Unlike fully autonomous robots, HRHAs maintain human ethical and strategic input while significantly extending physical and cognitive capability.

2. System Architecture Overview

2.1 Core Layers

| Layer | Function |
|---|---|
| Human Operator | Core decision-maker; responsible for ethical and legal judgment |
| Mechanical Exoskeleton | Supports strength, endurance, and locomotion under load |
| Integrated AI Suite | Provides sensor fusion, decision support, and environment mapping |
| HMI Interface | Allows seamless control of subsystems via neural, tactile, or vocal input |
| Embedded Systems & Drones | Executes autonomous sub-tasks: recon, mapping, suppression |

3. Subsystem Breakdown

3.1 Mechanical Augmentation System (MAS)

- Actuated Joint Assemblies: Hydraulic or electric servo-controlled for 6-DoF limb movement
- Load Management: Carbon-alloy frame distributes 150–300 kg loads across torso and limbs
- Thermal & Impact Shielding: Fire-retardant, ballistic-rated composite surfaces
- Power Supply: Dual-battery (Li-ion or hydrogen fuel cell), hot-swappable for continuous operation

3.2 Sensor and Perception Suite

- Multispectral Vision: Infrared, LIDAR, UV, thermal, and visible overlays
- Bio-Monitoring: HR, BP, oxygen, dehydration, fatigue sensors feeding both AI and medics
- Environmental Awareness: Gas detection, radiation sensing, acoustic triangulation
- External Feedback Loops: Real-time drone swarm data integration (up to 32 channels)

3.3 Human–Machine Interface (HMI)

- Primary Control: Helmet-based eye-tracking, voice command, and haptic buttons
- Secondary Input: Neural intent mapping (EMG or BCI) for rapid mode switching
- Feedback Output: Heads-up display (HUD), haptic pulse alerts, earpiece audio

4. AI Integration and Autonomy Framework

4.1 Embedded AI Modules

- Tactical Decision Support (military/police): Real-time enemy modeling, ballistic trajectory prediction
- Thermal Flow Analysis (fire): Fire growth modeling, evacuation path optimization
- Behavioral Prediction: Crowd analysis, body-language AI, aggression detection
- Medical Intervention: Early detection of injury/fatigue, initiation of auto-treatment protocol

4.2 Autonomy Levels

| Level | Description |
|---|---|
| 0 | Human-only command |
| 1 | AI advisory (no execution) |
| 2 | Conditional autonomy (drones, environment mapping) |
| 3 | Local autonomous operations (rescue, suppression, pursuit) with human override |
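
The four autonomy levels map naturally onto an ordered enumeration with a gate that decides whether the AI may act on its own. The sketch below is one possible encoding; the task names and the `REQUIRED_LEVEL` mapping are hypothetical examples drawn loosely from the table, and a human override always wins.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    HUMAN_ONLY = 0        # human-only command
    AI_ADVISORY = 1       # AI advises, never executes
    CONDITIONAL = 2       # drones, environment mapping
    LOCAL_AUTONOMOUS = 3  # rescue, suppression, pursuit with human override


# Hypothetical minimum level a task needs before the AI may execute it.
REQUIRED_LEVEL = {
    "drone_mapping": AutonomyLevel.CONDITIONAL,
    "fire_suppression": AutonomyLevel.LOCAL_AUTONOMOUS,
}


def ai_may_execute(task: str, level: AutonomyLevel,
                   human_override: bool = False) -> bool:
    """AI executes only at or above the task's level, never against an override."""
    if human_override:
        return False
    if level <= AutonomyLevel.AI_ADVISORY:
        return False  # levels 0 and 1 never execute
    return level >= REQUIRED_LEVEL[task]
```

With this gate, `ai_may_execute("fire_suppression", AutonomyLevel.CONDITIONAL)` is refused, while the same task at `LOCAL_AUTONOMOUS` is allowed unless a human override is active.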

5. Role-Specific Configurations

5.1 Military Hybrid Soldier

- Armor Class: Level IV ballistic + EMP hardening
- Systems: Integrated weapon targeting, UAV swarm control, GPS-denied navigation
- Comms: Encrypted mesh relay with forward base and satellite fallback
- Redundancy: Failover motion control for limb independence (operates under partial damage)

5.2 Law Enforcement Officer Hybrid

- Systems: Facial recognition (GDPR-compliant), live evidence streaming, suspect restraint tools
- Engagement Toolkit: Taser module, foam gun, deployable riot shield
- Behavioral AI: Conflict de-escalation and crowd emotion modeling
- Legal Integration: Auto-log of engagements for audit and litigation-proofing

5.3 Firefighter Hybrid Unit

- Thermal Tolerance: Sustained operation in 1200°C environments for up to 45 minutes
- Respiratory System: Closed-loop oxygen with active CO/CO₂ filtration
- Payload: Lifting/carrying capacity of 3+ victims simultaneously
- Autonomous Tools: Drone-based nozzle positioning, smoke ventilation coordination

6. Communications & Data Infrastructure

- Protocols: AES-256 encryption, 5G/6G uplink, LoRa fallback in remote zones
- Cloud Sync: Selective logging to mission servers or secure black boxes
- Peer Network: Agent-to-agent real-time coordination mesh (bandwidth adaptive)
- Security: Behavioral intrusion detection + manual override escalation tree

7. Maintenance and Lifecycle

| Component | Maintenance Cycle | Notes |
|---|---|---|
| Exosuit | 1000 hours | Joint recalibration, seal check |
| Battery Units | 500 cycles | Recharge protocol optimization |
| Sensors | Self-diagnosing | Replace if >5% drift |
| AI Core | Software updates monthly | Must include ethics compliance patching |
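
The ">5% drift" rule for sensors can be checked by comparing a reading against a known calibration reference. The article does not say how drift is measured, so the sketch below assumes relative deviation from a reference value; `drift_fraction` and `needs_replacement` are hypothetical helper names.

```python
def drift_fraction(reading: float, reference: float) -> float:
    """Relative deviation of a sensor reading from a known reference value."""
    if reference == 0:
        raise ValueError("reference must be non-zero")
    return abs(reading - reference) / abs(reference)


def needs_replacement(reading: float, reference: float,
                      limit: float = 0.05) -> bool:
    """Apply the table's rule: replace the sensor when drift exceeds 5%."""
    return drift_fraction(reading, reference) > limit
```

A sensor reading 106.0 against a 100.0 reference has drifted 6% and would be flagged, while a reading of 103.0 would pass.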

8. Challenges and Mitigations

- Over-Reliance on AI: Enforced fallback to human logic in ambiguous scenarios

- Ethical Risk: Embedded rulesets + oversight AI to ensure compliance

- Cybersecurity: Triple-layer defense (firmware, runtime, and network encryption)

- Fatigue: AI-monitored human health with enforced rest protocols
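One way to picture the "enforced fallback to human logic in ambiguous scenarios" is a confidence gate: the AI acts on its own recommendation only when it is both confident and clearly ahead of the alternatives. The thresholds, the `decide` function, and the idea of scoring candidate actions are assumptions made for this sketch; the framework does not specify how ambiguity is measured.

```python
from typing import Dict, Tuple


def decide(scores: Dict[str, float],
           min_confidence: float = 0.8,
           min_margin: float = 0.15) -> Tuple[str, str]:
    """Return (decider, action); scores map candidate actions to confidence in [0, 1]."""
    if not scores:
        return ("human", "no_recommendation")
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    best_action, best_score = ranked[0]
    runner_up = ranked[1][1] if len(ranked) > 1 else 0.0
    ambiguous = best_score < min_confidence or (best_score - runner_up) < min_margin
    if ambiguous:
        return ("human", best_action)  # AI only suggests; the operator decides
    return ("ai", best_action)


print(decide({"hold": 0.55, "advance": 0.45}))  # close call, goes to the human
print(decide({"hold": 0.95, "advance": 0.05}))  # clear case
```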

9. Deployment Pathway

- Phase I: Pilot with special forces, elite police, and high-risk fire teams

- Phase II: Expand to border patrol, riot response, urban fire units

- Phase III: Standardization in joint military-police-response frameworks

- Phase IV: International regulation, cross-border compatibility, civilian adaptation

10. Conclusion

Human-Robot Hybrid Agents represent a high-resilience, multi-domain toolset for future conflict, law, and disaster management. By preserving human agency while enabling robotic extension, HRHAs allow institutions to operate beyond biological constraints without compromising ethics or control. Their success lies in balanced autonomy, clear mission parameters, and embedded safety by design.

Human-Robot Hybrid Agents: The Future of Soldiers, Police, Rescue and Firefighters

By Ronen Kolton Yehuda (Messiah King RKY), June 2025

Introduction: The Rise of Human-Robot Hybrid Forces

In a world where threats are evolving faster than institutions can adapt, traditional military, law enforcement, and emergency services are reaching their physical, mental, and technological limits. The future lies not in replacing humans with machines—but in merging them. Human-robot hybrid agents combine the judgment, emotion, and adaptability of humans with the precision, strength, and resilience of robotics. This synthesis delivers a next-generation frontline force equipped for extreme conditions, ethical decision-making, and autonomous support.

Soldier 2.0: The Hybrid Warfighter

Structure:

- Exoskeletal Armor: A robotic exosuit enhances strength, speed, endurance, and protection. Built-in joint actuators allow soldiers to carry 5× their weight, jump higher, and move without fatigue.

- Helmet Interface: AI-enhanced smart helmet provides real-time battlefield data, drone feeds, language translation, and target acquisition with thermal and night vision overlays.

- Neural Command Layer: Soldiers can operate drones, fire systems, or call for support using eye-tracking, vocal triggers, or mind-to-machine interfaces.

- Auto-Medical Systems: Embedded med units auto-administer injections, clot wounds, or monitor vitals until evacuation.

Capabilities:

- Storm buildings without fatigue

- Coordinate with autonomous tanks and drones

- Survive in toxic, irradiated, or low-oxygen environments

- Navigate using AI-enhanced terrain mapping

Law Enforcement Hybrid: The Robotic Policeman

Structure:

- Urban Mobility Exosuit: Designed for agility over brute force, this lighter frame supports urban patrols, crowd control, high-speed pursuits, and rapid de-escalation.

- Tactical HUD (Heads-Up Display): Identifies threats, matches faces with national databases, and analyzes body language for predictive behavior detection.

- De-Escalation AI Companion: Embedded AI acts as an emotional advisor, helping officers maintain calm, make fair decisions, and defuse tension based on situational modeling.

- Non-Lethal Tools: Integrated stun systems, net launchers, shield deployment, and drone-deployed voice projection for safe crowd engagement.

Capabilities:

- Arrest or restrain suspects with minimal harm

- Predict and prevent crime with real-time urban monitoring

- Operate safely in riots, active shooter zones, or chemical events

- Maintain high mobility while processing and transmitting evidence

Hybrid Firefighter and Rescue Agent

Structure:

- Thermal Armor Exosuit: Shields against 1200°C+ heat, collapsing structures, and hazardous smoke. Oxygen-independent breathing systems allow extended operations.

- Multifunctional Arms: Mechanically assisted arms break down walls, lift debris, and carry multiple victims simultaneously.

- Drone Coordination Suite: Each firefighter commands aerial and ground drones for reconnaissance, water targeting, or structure scanning.

- Environmental AI: Predicts fire paths, calculates collapse risks, and identifies safest entry/exit paths using building blueprints and heat flow sensors.
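The Environmental AI item above mentions finding the safest entry and exit paths from blueprints and heat data. The article does not say which method is used, so the sketch below substitutes a standard technique, Dijkstra's shortest-path search, over a small floor grid where each cell carries a hazard cost. The grid layout, the cost formula (one step plus the hazard of the cell entered), and the `safest_path` name are all assumptions for illustration.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def safest_path(heat: List[List[Optional[float]]],
                start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Dijkstra over a floor grid; heat[r][c] is a hazard cost (>= 0) or None if blocked."""
    rows, cols = len(heat), len(heat[0])
    best: Dict[Cell, float] = {start: 0.0}
    prev: Dict[Cell, Cell] = {}
    queue = [(0.0, start)]
    while queue:
        cost, cell = heapq.heappop(queue)
        if cell == goal:
            path = [cell]
            while cell in prev:       # walk back to the start
                cell = prev[cell]
                path.append(cell)
            return path[::-1]
        if cost > best.get(cell, float("inf")):
            continue                  # stale queue entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and heat[nr][nc] is not None:
                new_cost = cost + 1.0 + heat[nr][nc]
                if new_cost < best.get((nr, nc), float("inf")):
                    best[(nr, nc)] = new_cost
                    prev[(nr, nc)] = cell
                    heapq.heappush(queue, (new_cost, (nr, nc)))
    return None                       # goal unreachable


# Hypothetical floor plan: the middle column is hot except at the bottom row.
grid = [
    [0.0, 9.0, 0.0],
    [0.0, 9.0, 0.0],
    [0.0, 0.0, 0.0],
]
print(safest_path(grid, (0, 0), (0, 2)))
```

The direct route crosses a hot cell, so the search returns the longer route around the bottom of the grid. A real system would need live sensor updates and collapse-risk estimates, which this sketch leaves out.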

Capabilities:

- Enter burning buildings without pause

- Rescue and treat multiple victims while under fire

- Coordinate with AI fire suppression drones

- Operate in chemical or industrial fire zones safely

Smart Shoes, Helmets, and AR/VR Glasses

- Smart Shoes: Equipped with pressure sensors, GPS, and microprocessors, enabling silent movement, terrain feedback, and location tracking.

- Smart Helmets: Integrate voice command, real-time environmental scanning, drone feeds, and audio processing.

- AR/VR Glasses: Provide immersive situational awareness, live blueprints, mission overlays, and remote coordination with command units.

Ethics, Oversight, and Command

- Override Controls: Officers and commanders retain kill-switch and decision veto rights.

- Behavioral Governance: Built-in AI must follow strict ethics logic trees, prioritizing life, law, and proportionality.

- Audit Logs: Every action is recorded, encrypted, and reviewed post-mission to ensure accountability.
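The audit-log and veto items above can be illustrated with a tamper-evident log. The sketch below uses hash chaining with SHA-256, which is a swapped-in technique chosen for the example: it shows how edits to a recorded action could be detected, but it does not perform the encryption the article mentions. The entry fields and the `append_entry` and `verify` functions are hypothetical.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder hash for the first entry's predecessor


def _digest(body: Dict) -> str:
    """Hash an entry body in a stable key order."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log: List[Dict], actor: str, action: str, vetoed: bool = False) -> Dict:
    """Append an entry whose hash covers its content and the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {"actor": actor, "action": action, "vetoed": vetoed, "prev": prev_hash}
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify(log: List[Dict]) -> bool:
    """Recompute every hash; an edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: entry[k] for k in ("actor", "action", "vetoed", "prev")}
        if entry["prev"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


log: List[Dict] = []
append_entry(log, actor="ai", action="deploy_net_launcher")
append_entry(log, actor="commander", action="deploy_net_launcher", vetoed=True)
print(verify(log))
```

A commander's veto is stored as an ordinary entry here, so post-mission review sees both the AI's proposal and the human decision that overrode it.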

Training and Integration

- Physical adaptability to suit systems

- Cyber-ethical decision-making

- Real-time AI feedback usage

- Cross-functional cooperation with drones and smart infrastructure

Civil and Military Dual Use

- Natural disaster response

- Border patrol and anti-terror surveillance

- Critical infrastructure protection

- Crowd safety in major events

Conclusion: Humanity Augmented, Not Replaced

Robots alone can’t understand context. Humans alone can’t keep pace with modern dangers. But together—through respectful, ethical augmentation—society can create a new protective force that is faster, stronger, safer, and smarter.

The future of soldiers, officers, firefighters, and rescue agents is not mechanical. It is hybrid. It is human—enhanced.

The Human-Robot Frontline: How Hybrid Agents Are Shaping the Future of Soldiers, Police, Rescue and Firefighters

By Ronen Kolton Yehuda (Messiah King RKY), June 2025

In an era of rising global threats—from wildfires and urban crime to cyberterrorism and high-tech warfare—human strength and speed are no longer enough. At the same time, fully autonomous robots still lack the empathy, judgment, and moral reasoning needed for public safety and defense.

Enter the Human-Robot Hybrid Agent—a new class of frontline professional enhanced by wearable robotics and artificial intelligence. These hybrid soldiers, police officers, and firefighters are trained humans equipped with robotic exosuits, smart helmets, AI assistants, and high-tech tools that allow them to do what no ordinary human—or machine—could do alone.

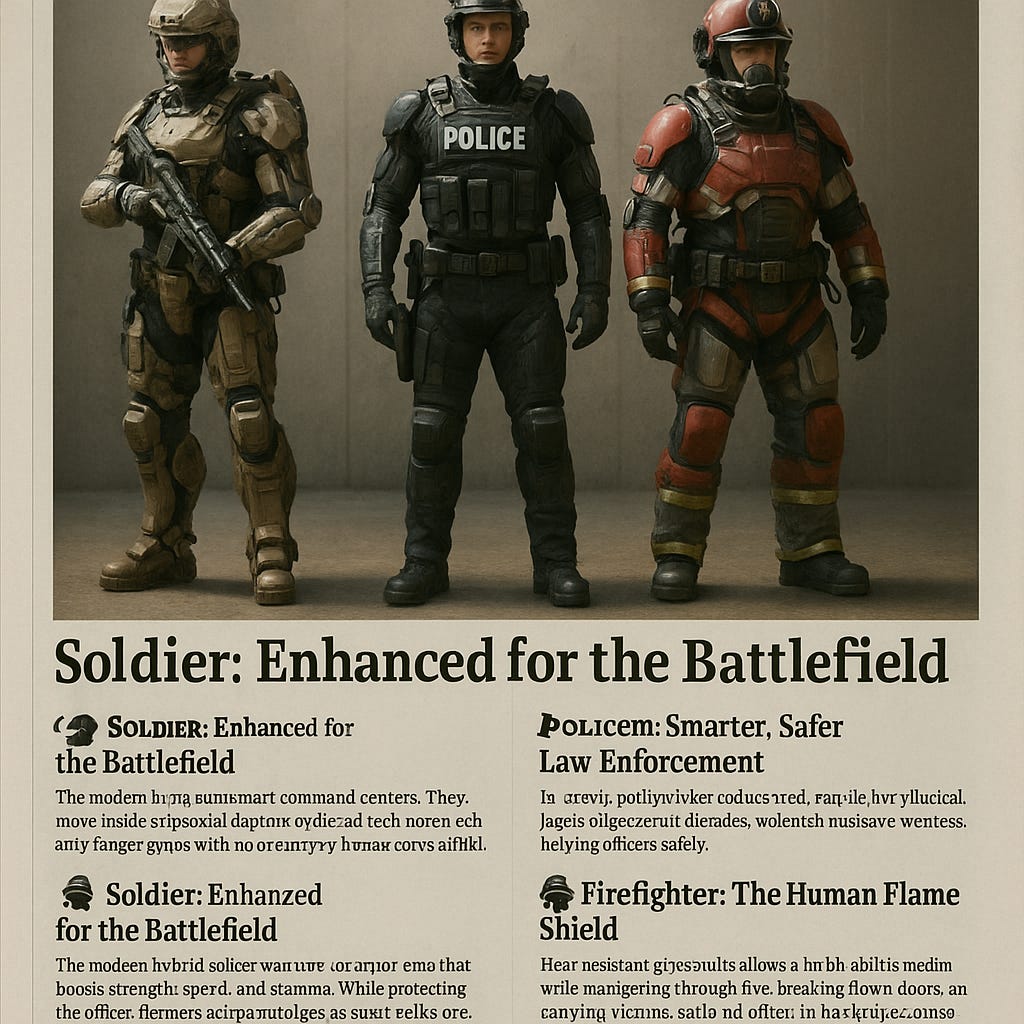

👨‍✈️ Soldier: Enhanced for the Battlefield

The modern hybrid soldier wears more than body armor. They move inside a robotic exosuit that boosts strength, speed, and stamina. With this technology, they can carry heavy loads, run longer distances, and enter danger zones without exhaustion.

Their helmets function as smart command centers—offering thermal imaging, drone camera feeds, live battlefield maps, and even language translation. Through voice or eye movements, they can operate drones, lock onto targets, or call for medical evacuation without ever lifting a finger.

Some systems even monitor the soldier’s vital signs and inject medication in emergencies—reducing the risk of death before help arrives.

👮 Policeman: Smarter, Safer Law Enforcement

In cities, police officers using hybrid systems wear lighter exosuits designed for flexibility and mobility. These suits assist with foot chases, physical restraint, and long patrol hours, while protecting the officer from physical harm.

A tactical heads-up display (HUD) helps identify faces, scan license plates, and detect weapons. Built-in AI companions analyze crowd behavior and suggest calming strategies in tense situations—helping officers de-escalate violence and act fairly under pressure.

Instead of lethal force, hybrid officers can use built-in non-lethal tools like electric stun systems, foam cannons, or net projectors to subdue suspects safely.

👨‍🚒 Firefighter: The Human Flame Shield

Firefighting is one of the most dangerous jobs in the world. Hybrid firefighter units wear heat-resistant exosuits that allow them to walk through fire, break down doors, and carry multiple victims at once.

Their suits include breathing systems, protective layers against toxic smoke, and built-in AI that maps out fire spread and structural weaknesses. Using voice commands or gestures, they can control firefighting drones, direct hoses, and locate trapped people.

In chemical spills or industrial accidents, these hybrid agents can enter hazardous zones where ordinary firefighters couldn’t survive—even without backup.

🤝 Humans and Machines: Working Together

These systems are not meant to replace human judgment. Instead, they extend human abilities while preserving human control. Officers still decide when to act. Firefighters still decide whom to save. Soldiers still decide when to fight—or not.

What changes is their capacity to move, respond, and survive in ways that were previously impossible.

🌍 A New Public Service

Hybrid agents are already being tested in select military units and high-risk rescue teams. In the future, they may become standard across public safety services—saving lives in warzones, riots, earthquakes, wildfires, and more.

The goal isn’t to turn people into machines. It’s to give them the tools they need to protect others—and themselves—in a dangerous world.

Technical Framework for Human-Robot Hybrid Agents in Defense, Law Enforcement, and Emergency Response

By Ronen Kolton Yehuda (Messiah King RKY), June 2025

Abstract

This article outlines the architecture, systems integration, and operational design of Human-Robot Hybrid Agents (HRHAs) intended for deployment across military, policing, rescue, and firefighting domains. By combining wearable robotics, embedded AI, autonomous subsystems, and human cognitive control, HRHAs represent a new paradigm in physical augmentation and distributed operational intelligence. The article defines subsystems, input/output chains, human–machine interfaces, and role-specific adaptations.

1. Introduction

Human-Robot Hybrid Agents are wearable-integrated, AI-enhanced operational entities where the biological operator is central to decision-making, and mechanical systems provide enhanced capacity in strength, sensing, survivability, and coordination. Unlike fully autonomous robots, HRHAs maintain human ethical and strategic input while significantly extending physical and cognitive capability.

2. System Architecture Overview

2.1 Core Layers

| Layer | Function |
|---|---|
| Human Operator | Core decision-maker; responsible for ethical and legal judgment |
| Mechanical Exoskeleton | Supports strength, endurance, and locomotion under load |
| Integrated AI Suite | Provides sensor fusion, decision support, and environment mapping |
| HMI Interface | Allows seamless control of subsystems via neural, tactile, or vocal input |
| Embedded Systems & Drones | Executes autonomous sub-tasks: recon, mapping, suppression |

3. Subsystem Breakdown

3.1 Mechanical Augmentation System (MAS)

- Actuated Joint Assemblies: Hydraulic or electric servo-controlled for 6-DoF limb movement

- Load Management: Carbon-alloy frame distributes 150–300 kg loads across torso and limbs

- Thermal & Impact Shielding: Fire-retardant, ballistic-rated composite surfaces

- Power Supply: Dual-battery (Li-ion or hydrogen fuel cell), hot-swappable for continuous operation

3.2 Sensor and Perception Suite

- Multispectral Vision: Infrared, LIDAR, UV, thermal, and visible overlays

- Bio-Monitoring: HR, BP, oxygen, dehydration, fatigue sensors feeding both AI and medics

- Environmental Awareness: Gas detection, radiation sensing, acoustic triangulation

- External Feedback Loops: Real-time drone swarm data integration (up to 32 channels)

3.3 Human–Machine Interface (HMI)

- Primary Control: Helmet-based eye-tracking, voice command, and haptic buttons

- Secondary Input: Neural intent mapping (EMG or BCI) for rapid mode switching

- Feedback Output: Heads-up display (HUD), haptic pulse alerts, earpiece audio

4. AI Integration and Autonomy Framework

4.1 Embedded AI Modules

- Tactical Decision Support (military/police): Real-time enemy modeling, ballistic trajectory prediction

- Thermal Flow Analysis (fire): Fire growth modeling, evacuation path optimization

- Behavioral Prediction: Crowd analysis, body-language AI, aggression detection

- Medical Intervention: Early detection of injury/fatigue, initiation of auto-treatment protocol

4.2 Autonomy Levels

| Level | Description |
|---|---|
| 0 | Human-only command |
| 1 | AI advisory (no execution) |
| 2 | Conditional autonomy (drones, environment mapping) |
| 3 | Local autonomous operations (rescue, suppression, pursuit) with human override |
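The four autonomy levels listed above lend themselves to an ordered enumeration with a gate that checks whether the AI may run a task on its own. The sketch below is one possible reading: the level names, the task-to-level mapping in `REQUIRED_LEVEL`, and the `may_execute` function are assumptions, loosely based on the examples given in the level descriptions.

```python
from enum import IntEnum


class Autonomy(IntEnum):
    HUMAN_ONLY = 0   # human-only command
    ADVISORY = 1     # AI advisory (no execution)
    CONDITIONAL = 2  # conditional autonomy (drones, environment mapping)
    LOCAL = 3        # local autonomous operations with human override


# Hypothetical mapping: minimum level at which the AI may execute a task unprompted.
REQUIRED_LEVEL = {
    "environment_mapping": Autonomy.CONDITIONAL,
    "drone_recon": Autonomy.CONDITIONAL,
    "rescue": Autonomy.LOCAL,
    "suppression": Autonomy.LOCAL,
    "pursuit": Autonomy.LOCAL,
}


def may_execute(task: str, level: Autonomy, human_override: bool = False) -> bool:
    """True if the AI may run `task` on its own at `level`; an override always blocks."""
    if human_override:
        return False
    required = REQUIRED_LEVEL.get(task)
    if required is None:
        return False  # unknown tasks stay under human command
    return level >= required


print(may_execute("drone_recon", Autonomy.ADVISORY))
print(may_execute("drone_recon", Autonomy.CONDITIONAL))
print(may_execute("pursuit", Autonomy.LOCAL, human_override=True))
```

Defaulting unknown tasks to human command keeps the gate conservative, in line with the document's emphasis on human override at every level.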

5. Role-Specific Configurations

5.1 Military Hybrid Soldier

- Armor Class: Level IV ballistic + EMP hardening

- Systems: Integrated weapon targeting, UAV swarm control, GPS-denied navigation

- Comms: Encrypted mesh relay with forward base and satellite fallback

- Redundancy: Failover motion control for limb independence (operates under partial damage)

5.2 Law Enforcement Officer Hybrid

- Systems: Facial recognition (GDPR-compliant), live evidence streaming, suspect restraint tools

- Engagement Toolkit: Taser module, foam gun, deployable riot shield

- Behavioral AI: Conflict de-escalation and crowd emotion modeling

- Legal Integration: Auto-log of engagements for audit and litigation-proofing

5.3 Firefighter and Rescue Hybrid Unit

- Thermal Tolerance: Sustained operation in 1200°C environments for up to 45 minutes

- Respiratory System: Closed-loop oxygen with active CO/CO₂ filtration

- Payload: Lifting/carrying capacity of 3+ victims simultaneously

- Autonomous Tools: Drone-based nozzle positioning, smoke ventilation coordination

6. Communications & Data Infrastructure

- Protocols: AES-256 encryption, 5G/6G uplink, LoRa fallback in remote zones

- Cloud Sync: Selective logging to mission servers or secure black boxes

- Peer Network: Agent-to-agent real-time coordination mesh (bandwidth adaptive)

- Security: Behavioral intrusion detection + manual override escalation tree

7. Maintenance and Lifecycle

| Component | Maintenance Cycle | Notes |
|---|---|---|
| Exosuit | 1000 hours | Joint recalibration, seal check |
| Battery Units | 500 cycles | Recharge protocol optimization |
| Sensors | Self-diagnosing | Replace if >5% drift |
| AI Core | Software updates monthly | Must include ethics compliance patching |

8. Challenges and Mitigations

- Over-Reliance on AI: Enforced fallback to human logic in ambiguous scenarios

- Ethical Risk: Embedded rulesets + oversight AI to ensure compliance

- Cybersecurity: Triple-layer defense (firmware, runtime, and network encryption)

- Fatigue: AI-monitored human health with enforced rest protocols

9. Deployment Pathway

- Phase I: Pilot with special forces, elite police, and high-risk fire/rescue teams

- Phase II: Expand to border patrol, riot response, urban fire units

- Phase III: Standardization in joint military-police-response frameworks

- Phase IV: International regulation, cross-border compatibility, civilian adaptation

10. Conclusion

Human-Robot Hybrid Agents represent a high-resilience, multi-domain toolset for future conflict, law, rescue, and disaster management. By preserving human agency while enabling robotic extension, HRHAs allow institutions to operate beyond biological constraints without compromising ethics or control. Their success lies in balanced autonomy, clear mission parameters, and embedded safety by design.

🤖 Human-Robot Hybrid Agents: The Future of Soldiers, Police, Rescue, and Firefighters

By Ronen Kolton Yehuda (Messiah King RKY), June 2025

🔧 Introduction: The Rise of Human-Robot Hybrid Forces

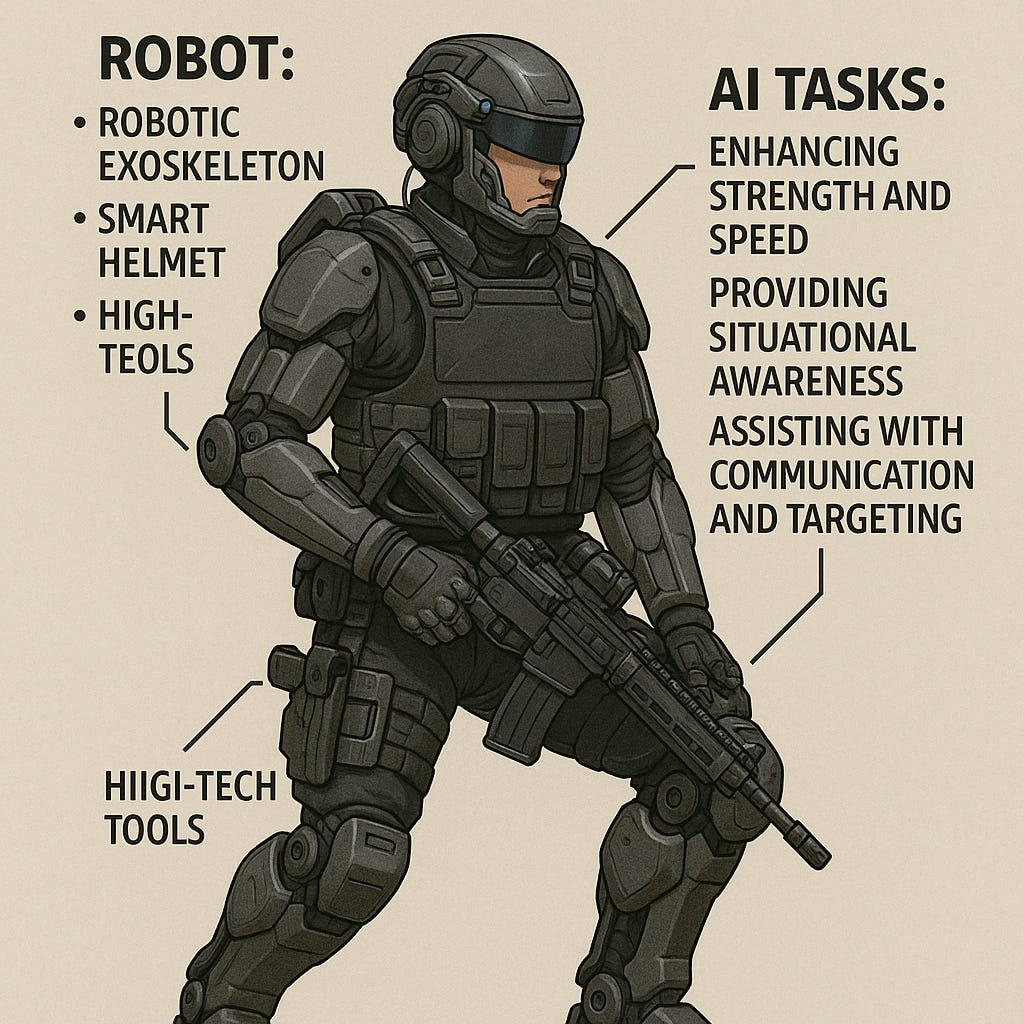

As modern threats outpace traditional response capabilities, institutions are turning to augmentation rather than replacement. Human-Robot Hybrid Agents (HRHAs) represent the next evolution in defense, law enforcement, firefighting, and rescue, merging human decision-making with robotic strength, smart wearables, and artificial intelligence. The hybrid agent is not a robot replacing humanity, but a human enhanced to meet 21st-century demands.

⚔️ Soldier 2.0: The Bionic Warfighter

Structure:

- Exoskeletal Armor: Robotic frame supports up to 300 kg load, multiplies lifting power, and enhances sprint and jump capabilities.

- Smart Combat Shoes: Embedded computing and kinetic sensors optimize balance, stride, and terrain adaptation.

- AR/VR Hybrid Helmet: Smart visor displays battlefield overlays, real-time drone feeds, biometric stats, and translated communications.

- Neural Interface: Brain-to-device connection via EEG/EMG or retinal/facial sensors enables hands-free weapon systems or drone control.

- Auto-Medical Units: Real-time vitals monitoring and injectable response to trauma or toxins.

Capabilities:

- Engage in high-intensity, low-oxygen or chemically contaminated zones

- Use real-time AI mapping and drone swarm coordination

- Initiate precision strikes or evacuations through thought-directed control

🚓 The Urban Peacekeeper: Hybrid Police Officer

Structure:

- Agile Mobility Exosuit: Lightweight suit designed for high-speed pursuit, agile movement, and crowd control scenarios.

- AR Glasses or Helmet HUD: Displays ID data, facial recognition results, behavioral cues, and navigation inside buildings.

- De-escalation AI: Emotion-modeling software helps officers interpret tone, movement, and facial expression to avoid unnecessary force.

- Smart Gloves & Holsters: Allow rapid deployment of non-lethal tools such as stun foam, nets, and acoustic disorienters.

- Smart Shoes with Terrain Tension Sensors: Enable silent movement, auto-balance on unstable surfaces, and emergency signal triggers.

Capabilities:

- Patrol autonomously or alongside drones with integrated threat tracking

- Coordinate with public surveillance and crowd modeling systems

- Capture evidence, manage suspects, and remain accountable through AI logging and officer-assisted decision support

🔥 Firefighter-Rescuer Hybrid: The Bionic Flame Shield

Structure:

- Thermal Armor Suit: Flameproof to 1200°C, with an oxygen-regenerating internal rebreather

- Robotic Assist Arms: Pull down doors, lift rubble, and carry up to three victims simultaneously

- VR Visor with Thermal & Structural Mapping: Identifies hottest zones, victim movement, and collapse risk

- Smart Boots with Shock Resistance: Assist in navigating debris and maintaining footing in unstable conditions

- Drones for Entry Mapping & Hose Positioning: Controlled via hand gestures, HUD gaze, or voice commands

Capabilities:

- Enter blazing structures and maintain function for 30–45 minutes

- Perform coordinated multi-victim rescues in industrial, chemical, or collapsed sites

- Operate without backup in extreme or remote environments using remote diagnostics and situational feedback

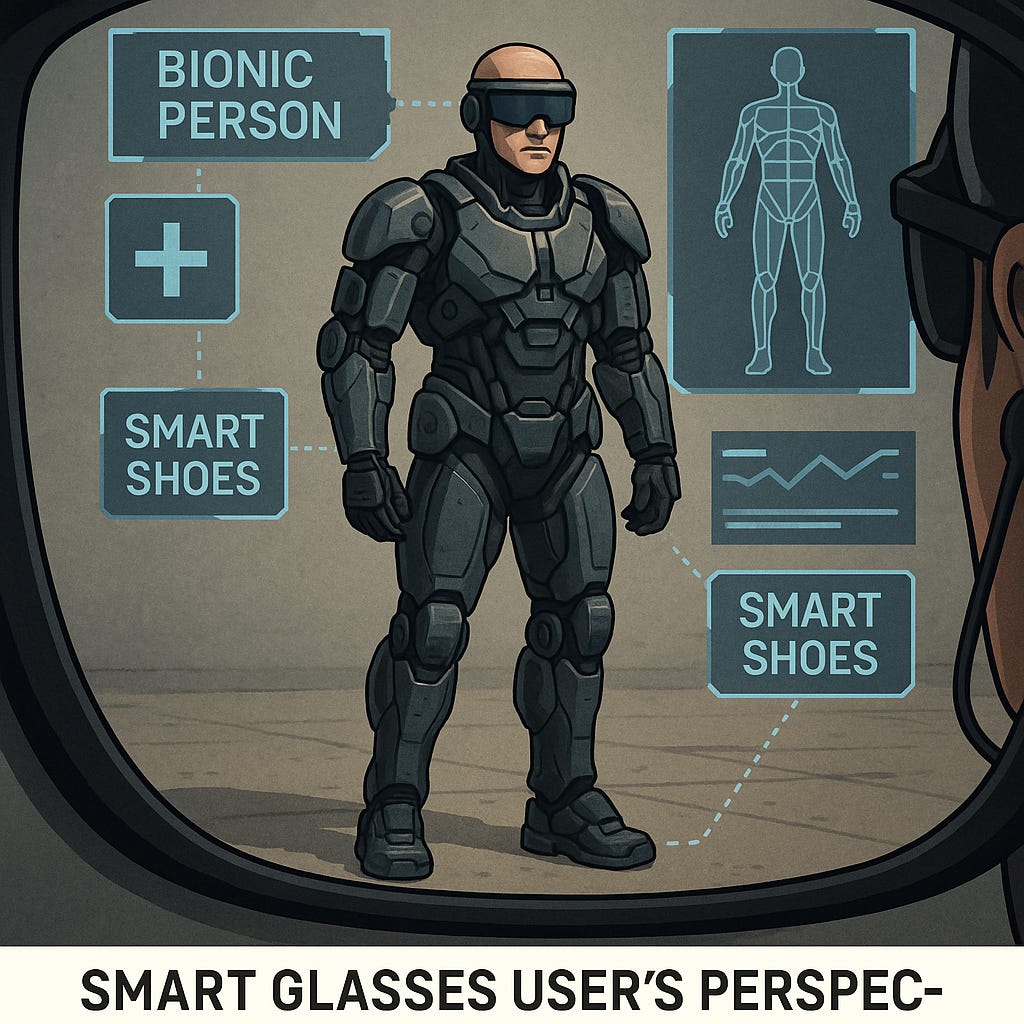

🧠 Bionic Integration: Smart Wearables and First-Person Interfaces

Every hybrid agent benefits from a seamless ecosystem of wearable intelligence:

- Smart Shoes collect kinetic and terrain data, balance feedback, and emergency biometric distress signals.

- AR/VR Glasses and Helmets give real-time visual overlays, thermal analysis, and target data.

- Glove Interfaces and Exosuit Joints adapt to grip type, task intensity, and autonomous or assisted operation.

- User Perspective Systems allow agents to operate equipment using eye-tracking, voice, or neural command, even in smoke, darkness, or silence.

🤖 Ethics, Oversight, and Safety Controls

- Override Protocols: Human supervisors always maintain kill-switch access

- AI Guardrails: Legal, medical, and ethical constraints embedded in system code

- Audit Trails: All actions recorded and encrypted for accountability

- Personal Privacy Layers: Biometric systems avoid scanning bystanders unless pre-authorized

🌍 Civil & Military Dual Use

Hybrid agents are already transforming operations in:

- Natural disasters and earthquake rescues

- Military search-and-recover units

- Riot and protest control with non-lethal crowd handling

- Remote firefighting in inaccessible terrain

🛡️ Conclusion: Humanity Enhanced, Not Replaced

This is not about machines taking over. This is about humans stepping into a new form—stronger, safer, faster, and better protected. The future is not robot-only or human-only. It is hybrid. It is ethical augmentation. It is human—enhanced.

In the Human-Robot Hybrid Agent system, each wearable unit—smart helmet, smart shoes, and AR/VR glasses—functions as a fully capable edge computing device. Each contains its own internal computing architecture, designed to operate independently or as part of a synchronized mesh. Here's how:

🪖 Smart Helmet

Role: Central command and sensory processing node.

Embedded Components:

- CPU: Handles real-time decision-making, command inputs, and logic processing.

- GPU: Renders AR overlays, maps, and visual enhancements; supports video feeds from drones or internal cameras.

- RAM: For rapid data handling (4–16 GB typical).

- Storage: Stores maps, mission plans, visual/audio logs, encrypted local data (128 GB – 1 TB SSD/NVMe).

- OS & AI Modules: Runs localized mission AI, threat recognition, and voice/gesture interfaces.

- Additional: Onboard cooling system, battery compartment, Wi-Fi/5G/SIM/Bluetooth mesh connectivity.

👟 Smart Shoes

Role: Movement optimization, ground intelligence, balance, and emergency response.

Embedded Components:

- CPU: Processes gait analysis, terrain prediction, fall detection, and command execution for haptics.

- GPU (Low-power): Renders 3D ground maps and real-time foot-pressure visualizations (when connected to HUD).

- RAM: Stores recent activity buffer and environmental context.

- Storage: Logs movement history, injury records, terrain data.

- Sensors: Pressure pads, accelerometers, gyros, IMUs, shock-response sensors.

- Other Modules: Self-tightening systems, emergency haptic alerts, GPS, noise suppression, temperature sensors.

🕶️ AR/VR Glasses

Role: Visual command interface, mission overlay, facial and object recognition.

Embedded Components:

- CPU: Manages AR rendering logic, HUD overlays, command interpretation (via eye, hand, or voice).

- GPU: Drives high-resolution AR/VR visuals, thermal overlays, facial recognition graphics.

- RAM: Real-time scene loading and sensor processing.

- Storage: Caches visual logs, preloaded mission data, operator-specific settings.

- Additional: Cameras, microphones, retinal tracking, AI-agent display projection, encryption module.

🔗 Shared Capabilities and Network Sync

- All devices are connected via encrypted wireless mesh (Bluetooth LE, Wi-Fi 6E, 5G/6G, or short-range LiFi).

- Each can operate independently during disconnection or serve as a failover computing unit if one device is damaged.

- Together, they form a distributed edge-computing network around the operator’s body.
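The failover behavior described above, where any wearable can take over if another is damaged, can be sketched as a small election over the devices that still respond. The preference order in `PREFERENCE`, the `elect_hub` function, and the idea that each device reports a simple alive flag (for example from a heartbeat) are assumptions made for this illustration.

```python
from typing import Dict, List, Optional

# Hypothetical preference order for hosting the coordinating role.
PREFERENCE: List[str] = ["helmet", "glasses", "shoes"]


def elect_hub(alive: Dict[str, bool]) -> Optional[str]:
    """Pick the first device in PREFERENCE that still reports as alive."""
    for device in PREFERENCE:
        if alive.get(device, False):
            return device
    return None  # no device responding


# Normal operation: the helmet acts as the hub.
print(elect_hub({"helmet": True, "glasses": True, "shoes": True}))
# Helmet damaged: the glasses take over.
print(elect_hub({"helmet": False, "glasses": True, "shoes": True}))
```

A fixed preference list keeps the choice deterministic, so every surviving device reaches the same answer without negotiation. A real mesh would also need to handle devices that disagree about who is alive.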

🧠 In Effect:

Each wearable is a miniature smart computer that combines autonomy, resilience, and tactical intelligence. This decentralization allows:

- Fault tolerance (no single point of failure)

- Real-time adaptation (terrain, threats, stress)

- Field autonomy without server reliance

This framework enables fully integrated hybrid agents that are more than just protected—they are computationally enhanced from the ground up.

🪖 Smart Helmets and Tactical Hats

Hybrid agents wear context-specific headgear designed for protection, sensory input, and mission control:

- Smart Helmets: Used in combat, riot, or fire zones. Provide ballistic or thermal protection, HUD overlays, AI-linked drone control, voice commands, eye-tracking, and audio processing. They serve as the central tactical node. Smart helmets often contain their own CPU and GPU modules, enabling edge processing, real-time data fusion, and direct connectivity to other wearables including smart shoes, gloves, belts, and AR glasses. They can function as partial command hubs even without central network links.

- Smart Hats: Lightweight and discreet. Used in low-risk, rescue, or surveillance roles. Include directional mics, micro-cameras, AR bands, and silent communication systems. Advanced versions may include onboard processors and short-range mesh networking, allowing local AI assistance and integration with other wearables for coordinated action. Ideal for comfort, agility, and civilian interaction scenarios.

By Ronen Kolton Yehuda (Messiah King RKY), June 2025🤖 Human-Robot Hybrid Agents: The Future of Soldiers, Police, Rescue, and Firefighters

🔧 Introduction: The Rise of Human-Robot Hybrid Forces

As modern threats outpace traditional response capabilities, institutions are turning to augmentation rather than replacement. Human-Robot Hybrid Agents (HRHAs) represent the next evolution in defense, law enforcement, firefighting, and rescue—merging human decision-making with robotic strength, smart wearables, and artificial intelligence. The hybrid agent is not a robot replacing humanity, but a human enhanced to meet 21st-century demands.⚔️ Soldier 2.0: The Bionic Warfighter

Structure:-

Exoskeletal Armor: Robotic frame supports up to 300 kg load, multiplies lifting power, and enhances sprint and jump capabilities.

-

Smart Combat Shoes: Embedded computing and kinetic sensors optimize balance, stride, and terrain adaptation.

-

AR/VR Hybrid Helmet: Smart visor displays battlefield overlays, real-time drone feeds, biometric stats, and translated communications.

-

Neural Interface: Brain-to-device connection via EEG/EMG or retinal/facial sensors enables hands-free weapon systems or drone control.

-

Auto-Medical Units: Real-time vitals monitoring and injectable response to trauma or toxins.

Capabilities:

-

Engage in high-intensity, low-oxygen or chemically contaminated zones

-

Use real-time AI mapping and drone swarm coordination

-

Initiate precision strikes or evacuations through thought-directed control

🚓 The Urban Peacekeeper: Hybrid Police Officer

Structure:-

Agile Mobility Exosuit: Lightweight suit designed for high-speed pursuit, agile movement, and crowd control scenarios.

-

AR Glasses or Helmet HUD: Displays ID data, facial recognition results, behavioral cues, and navigation inside buildings.

-

De-escalation AI: Emotion-modeling software helps officers interpret tone, movement, and facial expression to avoid unnecessary force.

-

Smart Gloves & Holsters: Allow rapid deployment of non-lethal tools such as stun foam, nets, and acoustic disorienters.

-

Smart Shoes with Terrain Tension Sensors: Enable silent movement, auto-balance on unstable surfaces, and emergency signal triggers.

Capabilities:

-

Patrol autonomously or alongside drones with integrated threat tracking

-

Coordinate with public surveillance and crowd modeling systems

-

Capture evidence, manage suspects, and remain accountable through AI logging and officer-assisted decision support

🔥 Firefighter-Rescuer Hybrid: The Bionic Flame Shield

Structure:

-

Thermal Armor Suit: Flameproof up to 1200°C, with an internal oxygen-regenerating rebreather

-

Robotic Assist Arms: Pull down doors, lift rubble, and carry up to three victims simultaneously

-

VR Visor with Thermal & Structural Mapping: Identifies hottest zones, victim movement, and collapse risk

-

Smart Boots with Shock Resistance: Assist in navigating debris and maintaining footing in unstable conditions

-

Drones for Entry Mapping & Hose Positioning: Controlled via hand gestures, HUD gaze, or voice commands

Capabilities:

-

Enter blazing structures and maintain function for 30–45 minutes

-

Perform coordinated multi-victim rescues in industrial, chemical, or collapsed sites

-

Operate without backup in extreme or remote environments using remote diagnostics and situational feedback
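The thermal mapping idea above can be illustrated with a minimal Python sketch. It assumes the visor receives a grid of temperature readings in °C; the function name `hottest_zones`, the grid layout, and the use of the suit's 1200°C rating as a cutoff are illustrative assumptions, not part of any described system.

```python
from typing import Dict, List, Sequence, Tuple


def hottest_zones(
    grid: Sequence[Sequence[float]], limit_c: float = 1200.0
) -> Dict[str, object]:
    """Find the hottest cell and every cell at or above the suit rating.

    `grid` is a hypothetical row-major matrix of temperatures in °C.
    """
    cells: List[Tuple[float, int, int]] = [
        (temp, r, c)
        for r, row in enumerate(grid)
        for c, temp in enumerate(row)
    ]
    if not cells:
        raise ValueError("thermal grid is empty")
    hottest_temp, hot_r, hot_c = max(cells)
    # Cells the agent should route around because they meet or exceed the limit.
    over_limit = [(r, c) for temp, r, c in cells if temp >= limit_c]
    return {
        "hottest": (hot_r, hot_c),
        "hottest_c": hottest_temp,
        "over_limit": over_limit,
    }
```

Calling `hottest_zones([[300, 650], [1250, 900]])` would flag cell `(1, 0)` as both the hottest point and the only one above the assumed limit. A real system would need calibrated sensors and structural data on top of this.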

🧠 Bionic Integration: Smart Wearables and First-Person Interfaces

Every hybrid agent benefits from a seamless ecosystem of wearable intelligence:

-

Smart Shoes collect kinetic and terrain data, balance feedback, and emergency biometric distress signals.

-

AR/VR Glasses and Helmets give real-time visual overlays, thermal analysis, and target data.

-

Glove Interfaces and Exosuit Joints adapt to grip type, task intensity, and autonomous or assisted operation.

-

User Perspective Systems allow agents to operate equipment using eye-tracking, voice, or neural command—even in smoke, darkness, or silence.
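As a rough illustration of how a smart shoe might raise an emergency distress signal, here is a minimal Python sketch. It assumes the shoe reports acceleration magnitude in g, and it treats a hard impact followed by stillness as a possible fall. The function name `detect_distress` and all thresholds are hypothetical values chosen for the example.

```python
from typing import Sequence


def detect_distress(
    accel_g: Sequence[float],
    impact_g: float = 3.0,
    still_band: float = 0.15,
    still_samples: int = 5,
) -> bool:
    """Return True if an impact spike is followed by a motionless stretch.

    accel_g       -- acceleration magnitudes in g (about 1.0 when at rest)
    impact_g      -- assumed threshold for a hard impact
    still_band    -- allowed deviation from 1 g to count as "still"
    still_samples -- how many consecutive still samples must follow the impact
    """
    for i, sample in enumerate(accel_g):
        if sample < impact_g:
            continue
        window = accel_g[i + 1 : i + 1 + still_samples]
        if len(window) == still_samples and all(
            abs(w - 1.0) <= still_band for w in window
        ):
            return True
    return False
```

An impact followed by continued movement does not trigger the signal, which is meant to reduce false alarms from jumps or hard landings. Real devices would likely combine this with heart rate or other biometric inputs.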

🪖 Smart Helmets and Tactical Hats

Hybrid agents wear context-specific headgear designed for protection, sensory input, and mission control:

-

Smart Helmets: Used in combat, riot, or fire zones. Provide ballistic or thermal protection, HUD overlays, AI-linked drone control, voice commands, eye-tracking, and audio processing. They serve as the central tactical node. Smart helmets often contain their own CPU and GPU modules, enabling edge processing, real-time data fusion, and direct connectivity to other wearables including smart shoes, gloves, belts, and AR glasses. They can function as partial command hubs even without central network links. They also include temperature control units, bullet/fire/disaster resistance, and optional connectivity to external aviation suits.

-

Smart Hats: Lightweight and discreet. Used in low-risk, rescue, or surveillance roles. Include directional mics, micro-cameras, AR bands, and silent communication systems. Advanced versions may include onboard processors and short-range mesh networking, allowing local AI assistance and integration with other wearables for coordinated action. Ideal for comfort, agility, and civilian interaction scenarios.
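The idea of a helmet acting as a partial command hub can be sketched in Python as follows. This is only an illustration under stated assumptions: the class `HelmetHub`, its fields, and the two-second freshness window are hypothetical, and the "fusion" here is simply keeping the newest reading per wearable and flagging stale devices.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Tuple


@dataclass
class HelmetHub:
    """Hypothetical hub that merges the newest reading from each wearable."""

    central_link_up: bool = True
    max_age_s: float = 2.0  # assumed freshness window in seconds
    latest: Dict[str, Tuple[float, Any]] = field(default_factory=dict)

    def ingest(self, device: str, timestamp: float, payload: Any) -> None:
        # Keep only the most recent reading per device.
        current = self.latest.get(device)
        if current is None or timestamp >= current[0]:
            self.latest[device] = (timestamp, payload)

    def snapshot(self, now: float) -> Dict[str, Any]:
        # Split devices into fresh and stale, and report the operating mode.
        fresh = {
            device: payload
            for device, (ts, payload) in self.latest.items()
            if now - ts <= self.max_age_s
        }
        stale = sorted(
            device
            for device, (ts, _) in self.latest.items()
            if now - ts > self.max_age_s
        )
        mode = "networked" if self.central_link_up else "local_hub"
        return {"mode": mode, "fresh": fresh, "stale": stale}
```

With `central_link_up=False`, the snapshot still works from local data, which mirrors the claim that the helmet can keep functioning without a central network link.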

🤖 Ethics, Oversight, and Safety Controls

-

Override Protocols: Human supervisors always maintain kill-switch access

-

AI Guardrails: Legal, medical, and ethical constraints embedded in system code

-

Audit Trails: All actions recorded and encrypted for accountability

-

Personal Privacy Layers: Biometric systems avoid scanning bystanders unless pre-authorized
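The controls above can be sketched in a few lines of Python. This is a simplified illustration, not a real safety system: the names `AuditTrail` and `SafetyController` are hypothetical, and the log uses SHA-256 hash chaining to make tampering detectable rather than the encryption the list mentions, which would need a vetted cryptography library and key management.

```python
import hashlib
import json
import time
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class AuditTrail:
    """Append-only log where each entry commits to the previous one."""

    entries: List[dict] = field(default_factory=list)

    @staticmethod
    def _digest(body: dict) -> str:
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def record(self, actor: str, action: str, timestamp: Optional[float] = None) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {
            "actor": actor,
            "action": action,
            "timestamp": time.time() if timestamp is None else timestamp,
            "prev_hash": prev_hash,
        }
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        # Recompute every hash and check that the chain links are intact.
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


class SafetyController:
    """Gate for agent actions: human kill switch plus a privacy rule."""

    def __init__(self, audit: AuditTrail) -> None:
        self.audit = audit
        self.halted = False
        self.scan_authorized = False  # bystander scans are off by default

    def kill_switch(self, supervisor: str) -> None:
        self.halted = True
        self.audit.record(supervisor, "kill_switch")

    def request(self, agent: str, action: str) -> bool:
        if self.halted:
            allowed = False
        elif action == "scan_bystander" and not self.scan_authorized:
            allowed = False
        else:
            allowed = True
        self.audit.record(agent, f"{action}:{'allowed' if allowed else 'denied'}")
        return allowed
```

Every request is logged whether it is allowed or denied, and once a supervisor triggers `kill_switch`, all further requests are refused. Editing any past entry makes `verify()` return `False`.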

🌍 Civil & Military Dual Use

Hybrid agents are already transforming operations in:

-

Natural disasters and earthquake rescues

-

Military search-and-recover units

-

Riot and protest control with non-lethal crowd handling

-

Remote firefighting in inaccessible terrain

🛡️ Conclusion: Humanity Enhanced, Not Replaced

This is not about machines taking over. This is about humans stepping into a new form: stronger, safer, faster, and better protected. The future is not robot-only or human-only. It is hybrid. It is ethical augmentation. It is human, enhanced.

By Ronen Kolton Yehuda (Messiah King RKY), June 2025

-

Legal Statement for Intellectual Property and Collaboration

Author: Ronen Kolton Yehuda (MKR: Messiah King RKY)

The concepts, structures, and written formulations of the Hybrid Human-Robot Agent Systems, including Exoskeleton Platforms, Smart Wearables, Smart Glasses, Smart Shoes, Smart Helmets and Hats, and related human-robot integration frameworks, are the original innovation and intellectual property of Ronen Kolton Yehuda (MKR: Messiah King RKY).

This statement affirms authorship and creative development of these systems as integrated human-robot solutions combining robotics, artificial intelligence, wearable computing, and exoskeletal design for defense, rescue, industrial, medical, and civilian applications.

The author does not claim ownership over general scientific or engineering principles that are public knowledge, but affirms originality and authorship of the concepts, integration models, terminology, and written expression presented in these works.

The author welcomes lawful collaboration, licensing, and partnership proposals related to these technologies, provided that intellectual property rights, authorship acknowledgment, and ethical research standards are fully respected.

AR/VR Hybrid Smart Glasses: A Dual-Purpose Revolution

Smart Shoes: Revolutionizing the Future of Footwear and Technology

Smart Ankle & Smart Insole: A New Era in Footwear Technology

SmartSole/ Smart Unit for Soles/Shoes OS V1 of Villan | by Ronen Kolton Yehuda | Medium

The Future of Smart Wearables: A New Era of Connected Technology

Hybrid Human-Robot Agent Systems: Exoskeletal Technologies for Security, Rescue, and Human Care

Published by MBR: Messiah King RKY (Ronen Kolton Yehuda)
